Voice cloning technology has evolved from a Hollywood curiosity to an essential tool transforming industries worth over $2.7 billion annually

For decades, Val Kilmer's voice was an unmistakable instrument in Hollywood. From the cocky confidence of "Iceman" in Top Gun to the haunting baritone of Doc Holliday in Tombstone, his voice was inseparable from his identity. But in 2014, a diagnosis of throat cancer and the subsequent life-saving treatment ravaged his vocal cords, reducing his iconic voice to a rasp. For an actor whose craft depended on his voice, the loss was profound, seemingly closing a door on his career.

Then, in 2022, audiences watching Top Gun: Maverick heard the impossible: Iceman spoke again, and it was unmistakably Val Kilmer. This was not a sound-alike actor or a clever audio edit. It was the result of a painstaking collaboration with a voice cloning company that analyzed hours of Kilmer's past recordings to create a perfect, high-fidelity AI model of his voice. This resurrected voice represented a watershed moment - not just for one beloved actor, but for the very future of human identity and communication.

This guide will take you on a comprehensive journey through this fascinating landscape. We will move beyond the headlines to explore the surprising history of this technology, demystify the science that powers it, and provide a step-by-step walkthrough of how you can create your own digital voice. We'll navigate the crowded marketplace of cloning tools, dissecting their strengths and weaknesses, and confront the critical ethical and legal considerations you must understand. By the end, you will not only know how to clone a voice but also grasp the immense power and responsibility that comes with it.

From Mechanical Curiosities to Digital Doppelgangers

The Journey of the Artificial Voice

The 246-Year Journey to Perfect Speech

Voice synthesis began in 1779, when Christian Gottlieb Kratzenstein, a German-born professor working in Copenhagen, built acoustic resonators that could produce five vowels in response to a prize challenge from the Imperial Academy of Sciences in St. Petersburg. This mechanical marvel was followed by Wolfgang von Kempelen's 1791 "speaking machine" in Vienna, a leather-tube contraption that helped establish the vocal tract as the primary site of speech articulation.

The breakthrough moment came in 1939 when Homer Dudley unveiled VODER at the New York World's Fair. This first true speech synthesizer required a skilled operator using a wrist bar, foot pedal, and ten finger-controlled filters to produce intelligible speech. Visitors waited in long lines to hear a machine "speak," witnessing the birth of artificial voice technology.

For decades, progress crawled. The 1970s brought formant synthesis systems that could achieve human-like quality only through painstaking manual parameter adjustment. Dennis Klatt's 1981 system became the voice of Stephen Hawking, demonstrating both the technology's potential and its limitations—robotic, monotone, but functional.

Everything changed on September 8, 2016. Google DeepMind published "WaveNet: A Generative Model for Raw Audio," introducing neural networks that could generate speech by predicting individual audio samples. WaveNet reduced the gap between human and synthetic speech by 50% in a single breakthrough, achieving what decades of traditional approaches couldn't accomplish.

The revolution accelerated rapidly:

- 2017: Google's Tacotron 2 achieved a mean opinion score of 4.53 out of 5.0 in human quality ratings

- 2018: Baidu demonstrated voice cloning from just 3.7 seconds of audio

- 2020: MIT researchers achieved high-quality cloning from 15 seconds of training data

- 2024: ElevenLabs and others deployed commercial systems requiring only 1 minute of audio for professional-grade voice cloning

The $25 Billion Market Nobody Saw Coming

Reality Check: The Numbers Behind the Hype

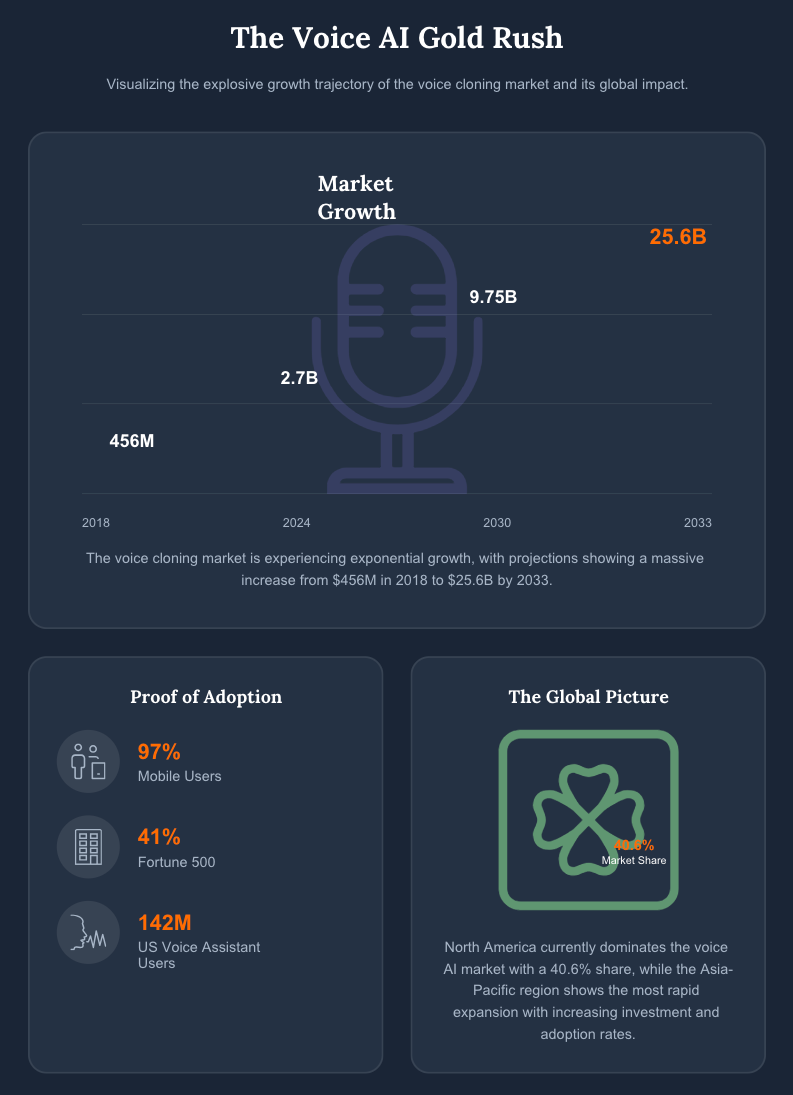

MYTH: Voice cloning is a niche technology for tech enthusiasts. REALITY: The market grew from $456 million in 2018 to $2.7 billion in 2024, representing 490% growth in just six years. Conservative projections show $9.75 billion by 2030, while aggressive estimates suggest $25.6 billion by 2033.
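The growth figure above is easy to check for yourself. The short sketch below recomputes the total growth from the two market sizes quoted in the text and also derives the implied compound annual growth rate, which the article does not state. The function names are illustrative, and the inputs are simply the quoted numbers, not independent data.

```python
def percent_growth(start: float, end: float) -> float:
    """Total percentage growth from start to end."""
    return (end - start) / start * 100.0


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, expressed as a percentage."""
    return ((end / start) ** (1.0 / years) - 1.0) * 100.0


# Market sizes quoted in the text, in millions of US dollars.
market_2018 = 456.0
market_2024 = 2700.0

total = percent_growth(market_2018, market_2024)
annual = cagr(market_2018, market_2024, years=2024 - 2018)

print(f"Total growth 2018-2024: {total:.0f}%")
print(f"Implied compound annual growth: {annual:.1f}%")
```

The total comes out at about 492%, which the text rounds to 490%. The implied annual rate lands between 34% and 35%, assuming growth compounded evenly over the six years.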

The statistics reveal explosive adoption:

- 142 million Americans used AI voice assistants in 2022

- 97% of mobile users utilize AI-powered voice features

- 41% of Fortune 500 employees have used ElevenLabs technology

- 55% of companies currently use AI technologies, with voice applications leading enterprise adoption

Geographic distribution shows global demand: North America dominates with 40.6% market share, while Asia-Pacific represents the fastest-growing region. The broader AI voice generator market, valued at $3.5 billion in 2023, is projected to reach $21.75 billion by 2030.

Investment flows confirm the opportunity. ElevenLabs raised $80 million at a $1.1 billion valuation in January 2024. PlayAI secured $21 million in November 2024 (reportedly from Saudi Arabia) and was acquired by Meta in 2025. Overall AI funding exceeded $100 billion globally in 2024, with voice AI capturing significant attention from tier-one venture firms.

From the Trenches: Real Users Tell Their Stories

The Content Creator Revolution

Christopher Kokoski, writing on Medium, tested voice cloning for the first time: "The damn voice sounded almost just like me. The cloned voice had the same pitch and pacing. It even captured the subtle ways I stress certain words. The laugh lines and seriousness of my voice were all there... It wasn't just life-like. It was alive with all the quirks of my speech."

The JOLLY YouTube channel secretly used Respeecher to create a 2.5-hour audiobook using their co-host's cloned voice. Despite production challenges during wartime in Ukraine, the project maintained consistent emotional accents and character differentiation throughout, demonstrating commercial-grade capabilities.

One ElevenLabs user reported dramatic cost savings: "For me, the cost of a voice actor would be $200 an hour as opposed to the $13 an hour ElevenLabs is costing me. That's a serious discount for what I would consider 80% of the quality of a human for a sixteenth of the price."

Business Applications Drive Adoption

A CEO in the animation industry noted: "My experience with ElevenLabs has been positive overall. The platform offers cutting-edge AI voice generation technology, allowing users like me to create natural-sounding voices for various applications, such as chatbots, customer support, and voiceovers."

Enterprise deployments are scaling rapidly. Respeecher works with Netflix, Universal Pictures, and Paramount for film dubbing and character voice preservation. Corporate training departments report 70-80% time savings when localizing content across multiple languages using consistent voice cloning.

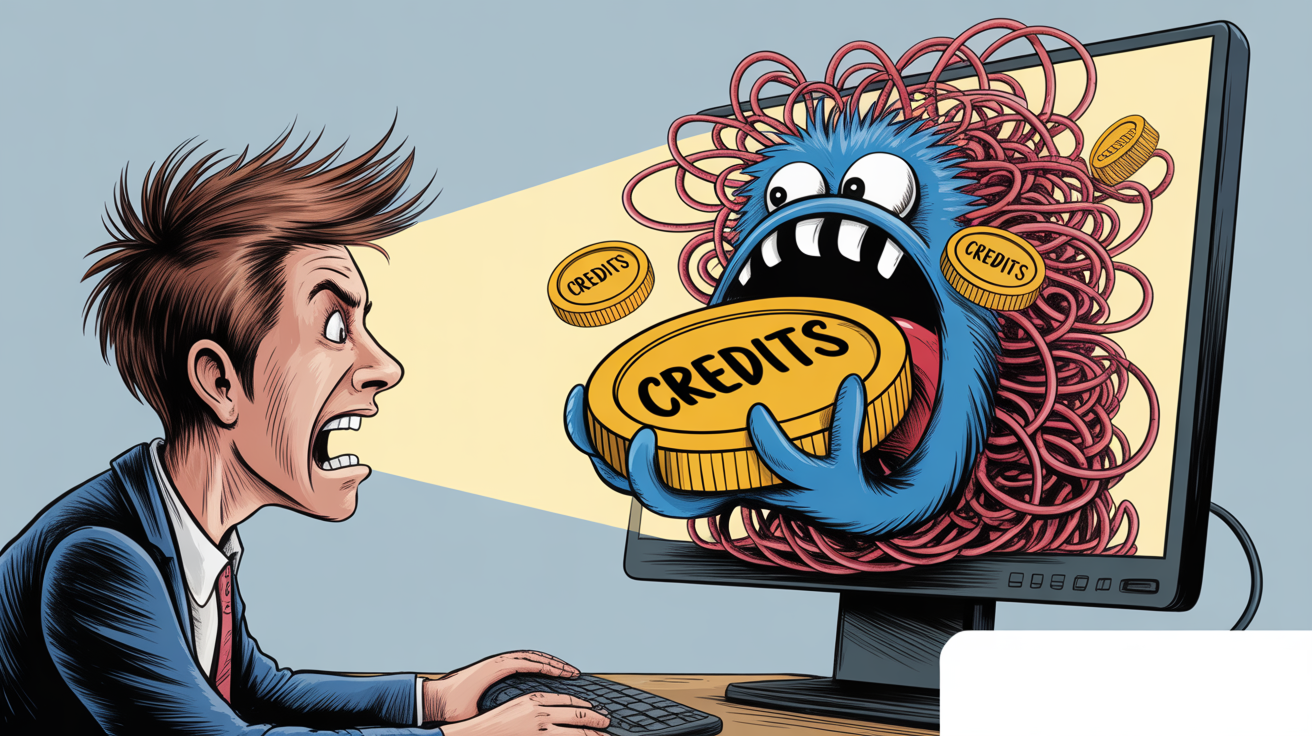

The Fine Print: When Technology Disappoints

Not every experience proves positive. A June 2025 user review revealed frustrations: "The voice cloning is poor; even after providing many samples, it sounds horrifically fake. The text-to-speech conversation eats your credits even when ElevenLabs output is weird, long pauses, a total change in volume, voice changes, slowing down at random."

Tiago Berezoski, a motion designer, identified common challenges: "The only tricky part is about getting the right tone, sometimes you need to split the phrases and also every time you hit the recording button it shows a different tone of voice."

Billing and subscription issues plague multiple platforms. Users report unexpected charges, credit losses, and account lockouts. One complaint detailed: "I purchased a monthly subscription on May 17th, 2025, that included 100,000 credits. I used part of it but still had 57,000 credits remaining. Suddenly, my account was blocked from accessing the remaining credits unless I renewed the subscription."

The Complete Platform Landscape: Your Decision Guide

Tier 1: The Quality Leaders

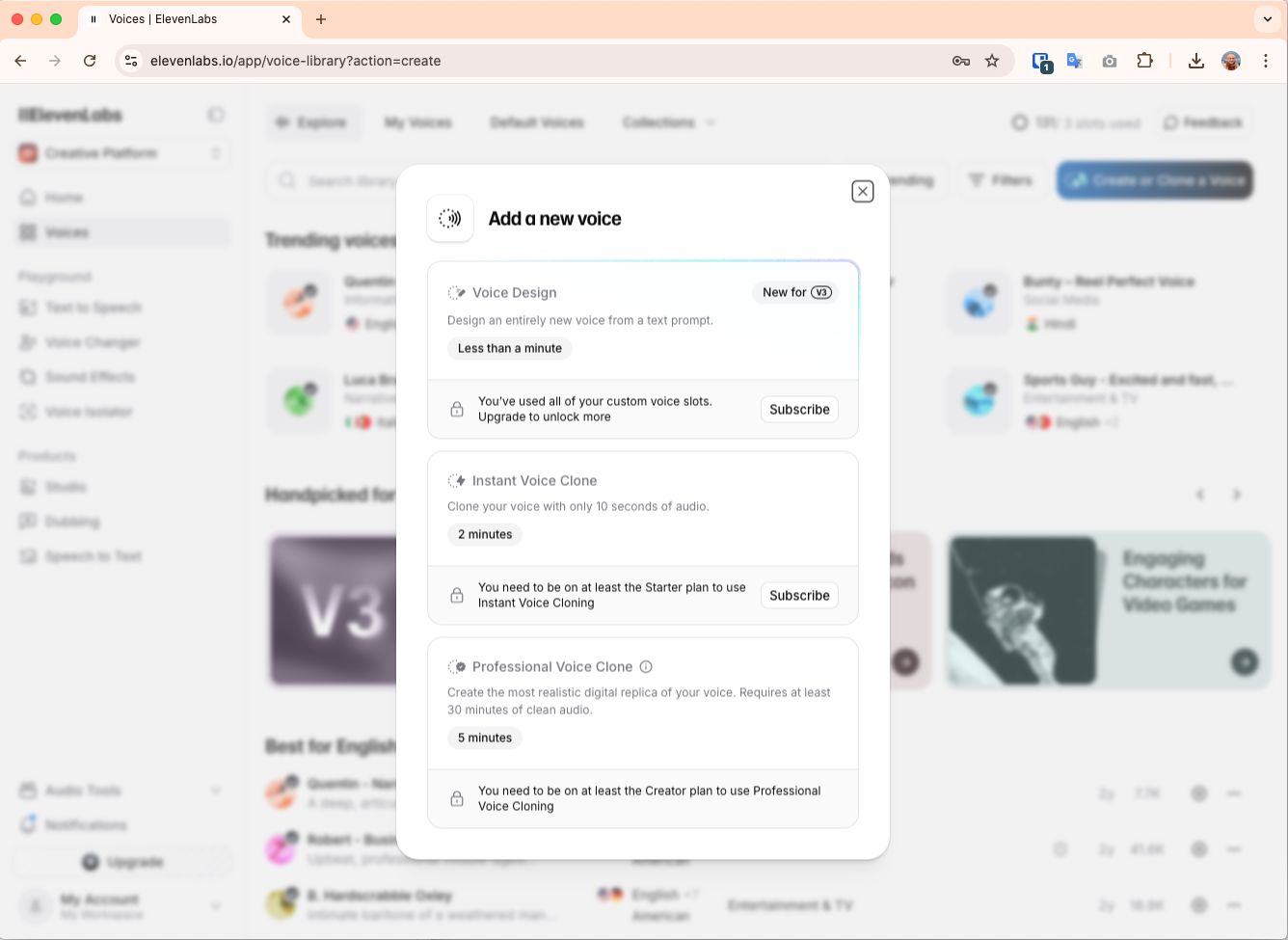

ElevenLabs dominates voice realism with 4.7/5 user ratings and the most natural-sounding output available. Their technology supports 32 languages and offers both instant cloning (1-minute minimum) and professional cloning (30 minutes-3 hours optimal). Pricing starts at $5/month, with a generous free tier providing 10,000 characters monthly.

Elevenlabs

WellSaid Labs targets enterprise customers with SOC 2 Type 2 compliance and GDPR adherence. Their 120+ English voices achieve 4.6/5 quality ratings with enterprise-grade reliability. At $89/month minimum, they're expensive but trusted by Fortune 500 companies for mission-critical applications.

https://www.wellsaid.io

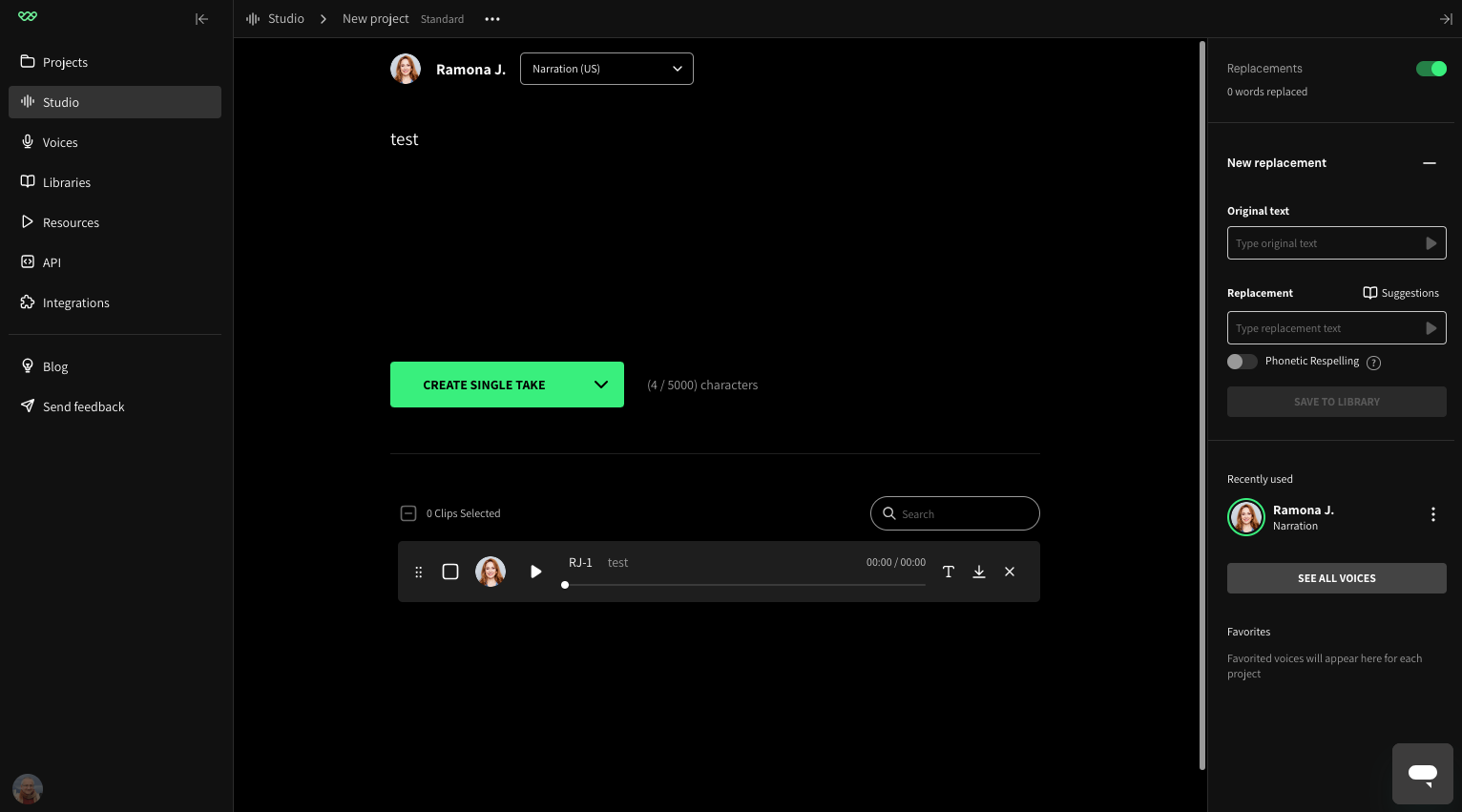

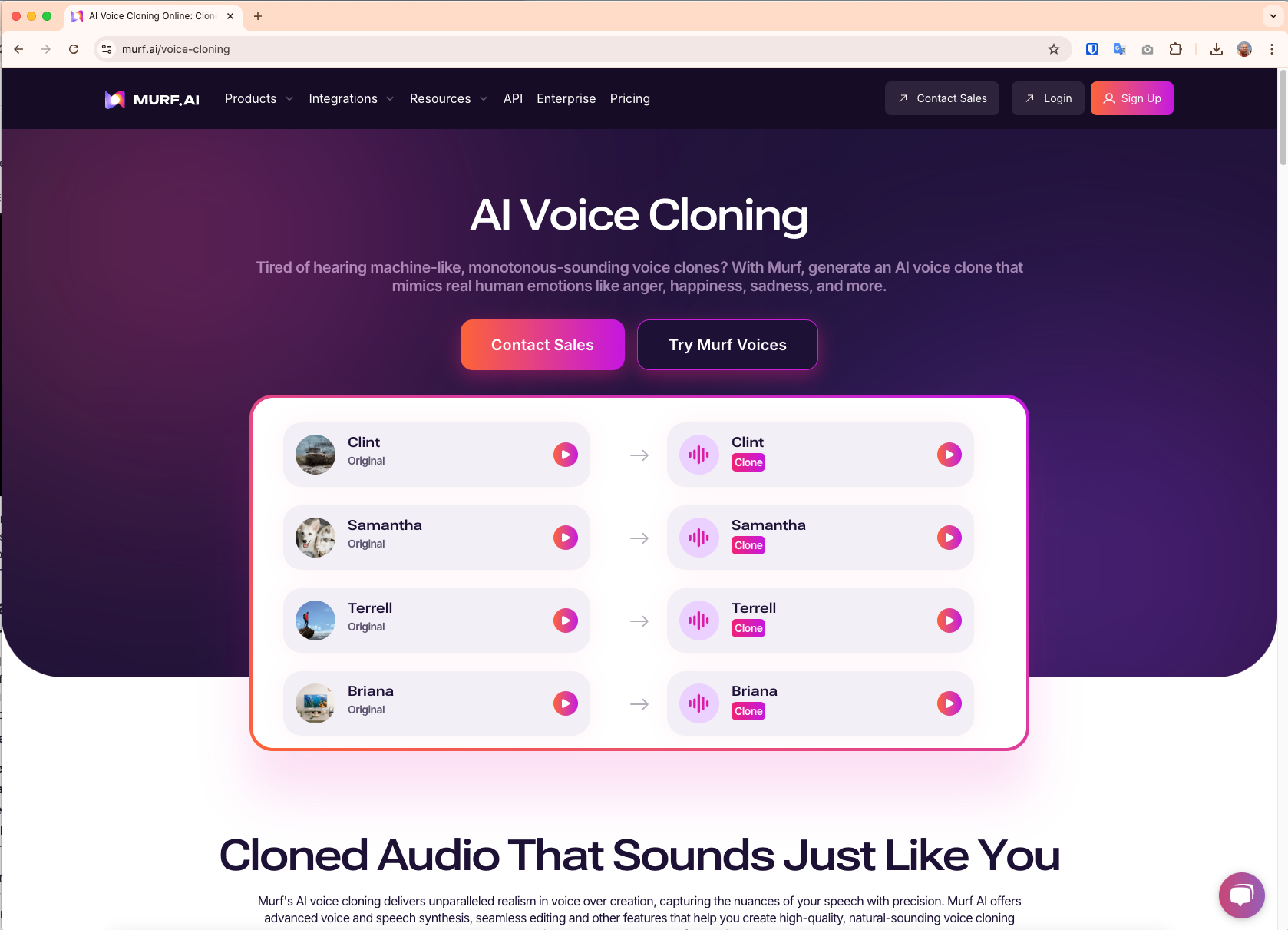

Murf AI excels in business applications with integrated collaboration tools, background music mixing, and Adobe Captivate integration. Their $29/month Creator plan provides 200+ voices across 20+ languages, making them ideal for corporate training and marketing teams.

murf ai

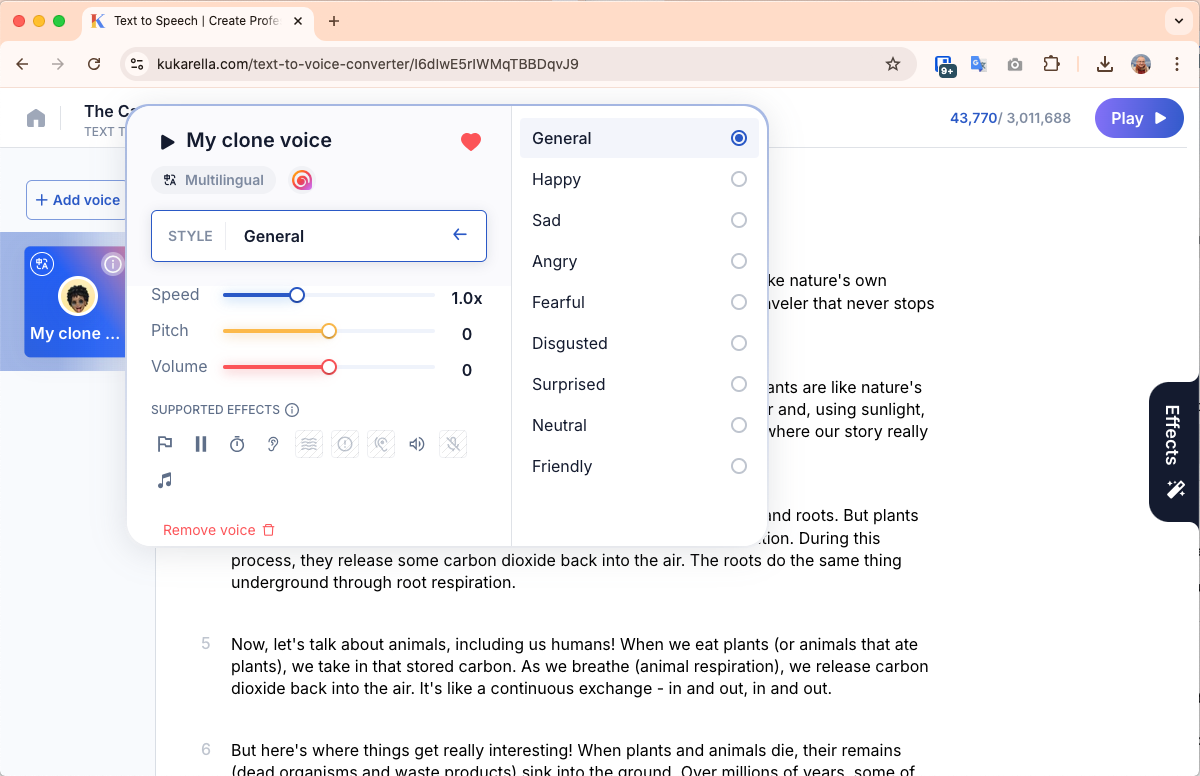

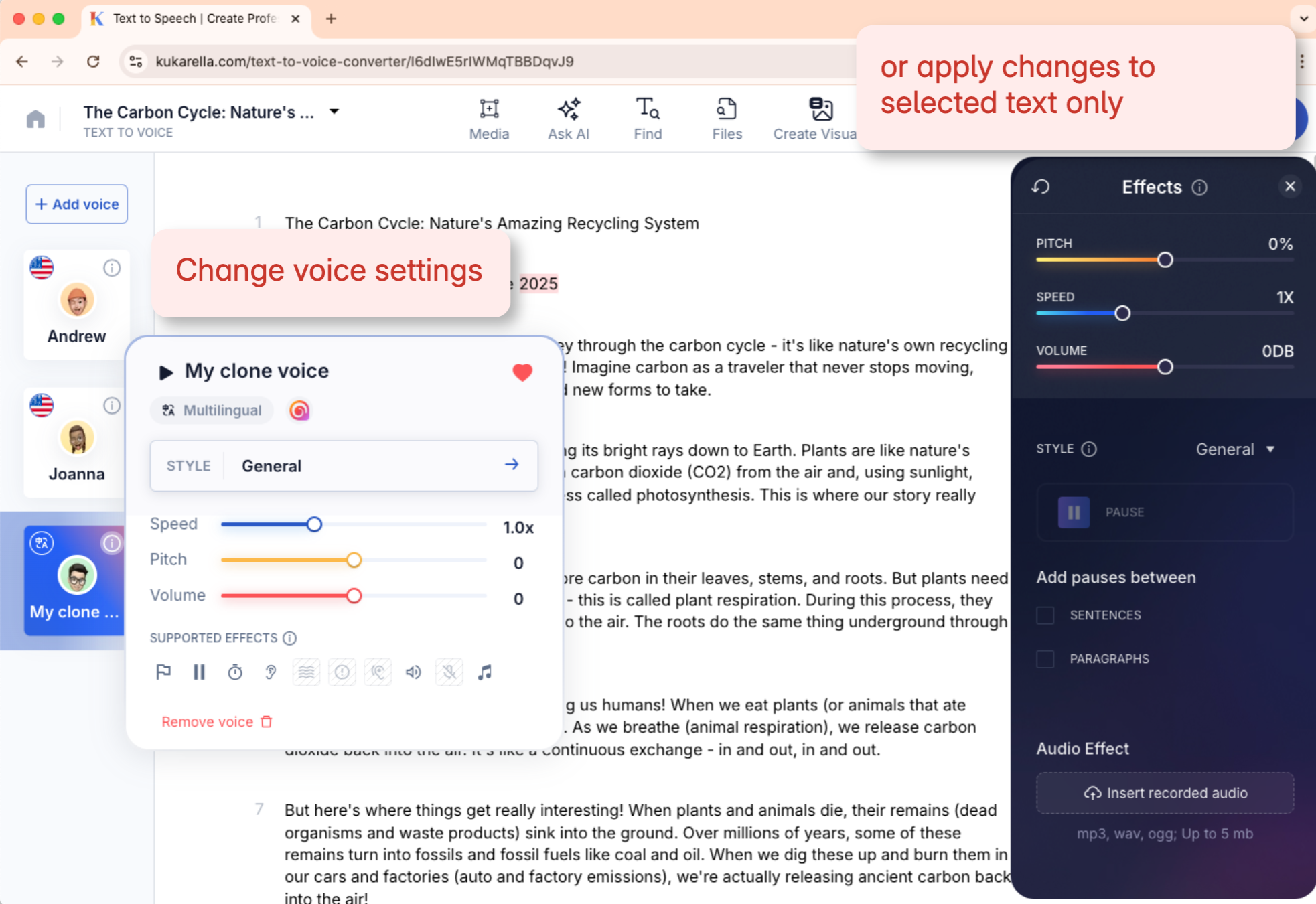

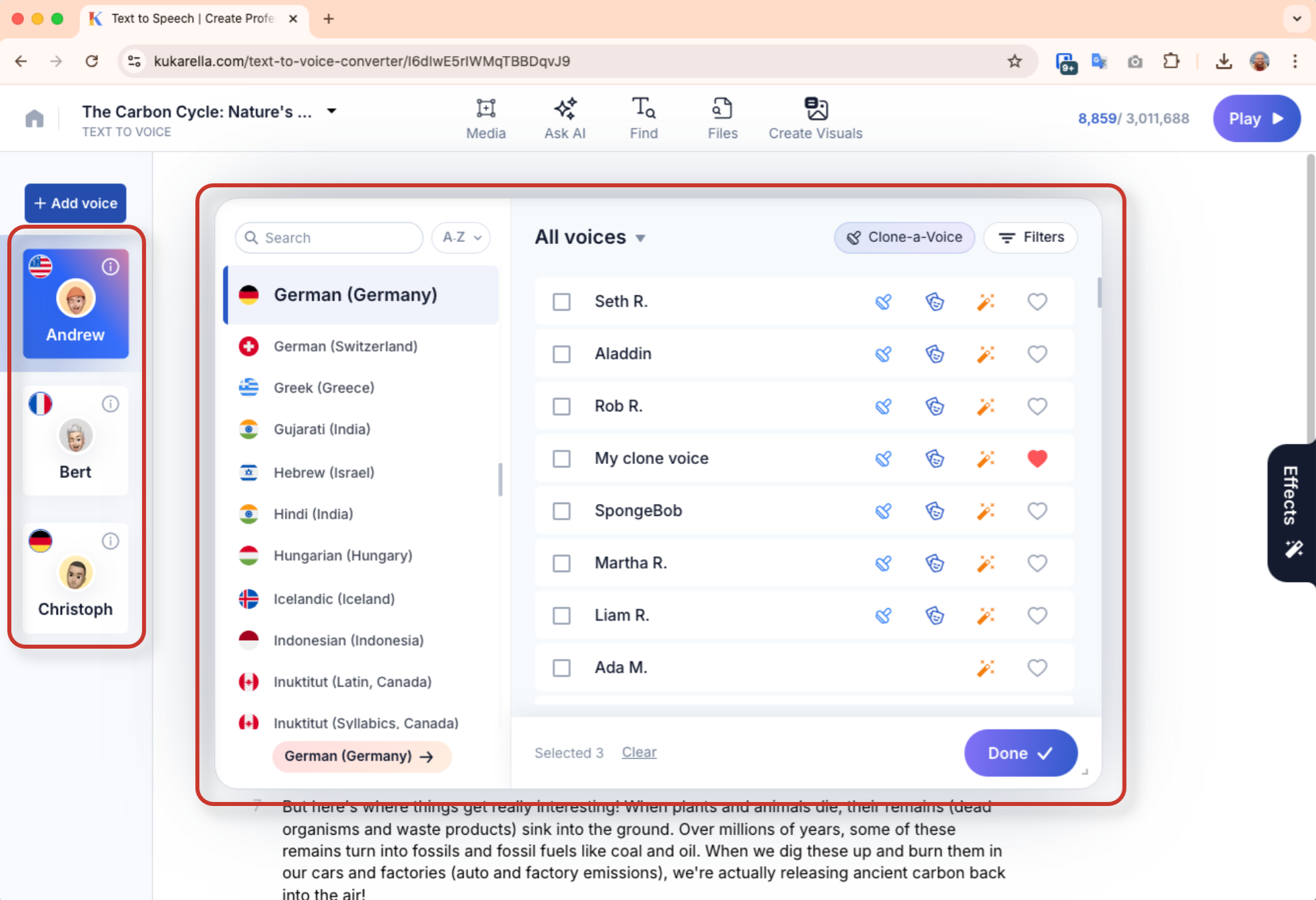

The Kukarella Advantage: Emotional Intelligence Meets Multilingual Mastery

Kukarella stands apart through unique emotional styling capabilities. While most platforms focus purely on voice replication, voices cloned with Kukarella include advanced emotional controls - whispers, sighs, pauses, and emphatic speech patterns that bring content to life.

Kukarella clone voice

Kukarella's innovative features include:

- AI Dialogue Creator integrating with GPT for character conversations

- Visuals Creator creating images and video clips to illustrate voiceover

- Transcribe Hub providing AI-powered speech recognition

- AI writing assistants helping with content creation and editing

- Cloud TTS Integration accessing Amazon, Google, OpenAI, IBM, and Microsoft voice libraries simultaneously

At 4.4/5 user ratings, Kukarella users praise exceptional customer service and versatility. Their free plan offers full features with usage limits, making them accessible for creators testing voice cloning capabilities.

Feature Comparison Matrix

| Platform | Voice Quality | Languages | Voice Cloning | Pricing (Entry) | Best For |
| --- | --- | --- | --- | --- | --- |
| ElevenLabs | ⭐⭐⭐⭐⭐ | 32 | Professional/Instant | $5/month | Audiobooks, Podcasts |
| WellSaid Labs | ⭐⭐⭐⭐⭐ | English | Custom Program | $89/month | Enterprise Compliance |
| Kukarella | ⭐⭐⭐⭐⭐ | 55+ | Emotional Styles | Free/Paid | Educational, Multilingual |
| Murf AI | ⭐⭐⭐⭐⭐ | 20+ | Advanced | $29/month | Business Content |
| Synthesia | ⭐⭐⭐⭐ | 140+ | Avatar-integrated | $20/month | Video Content |

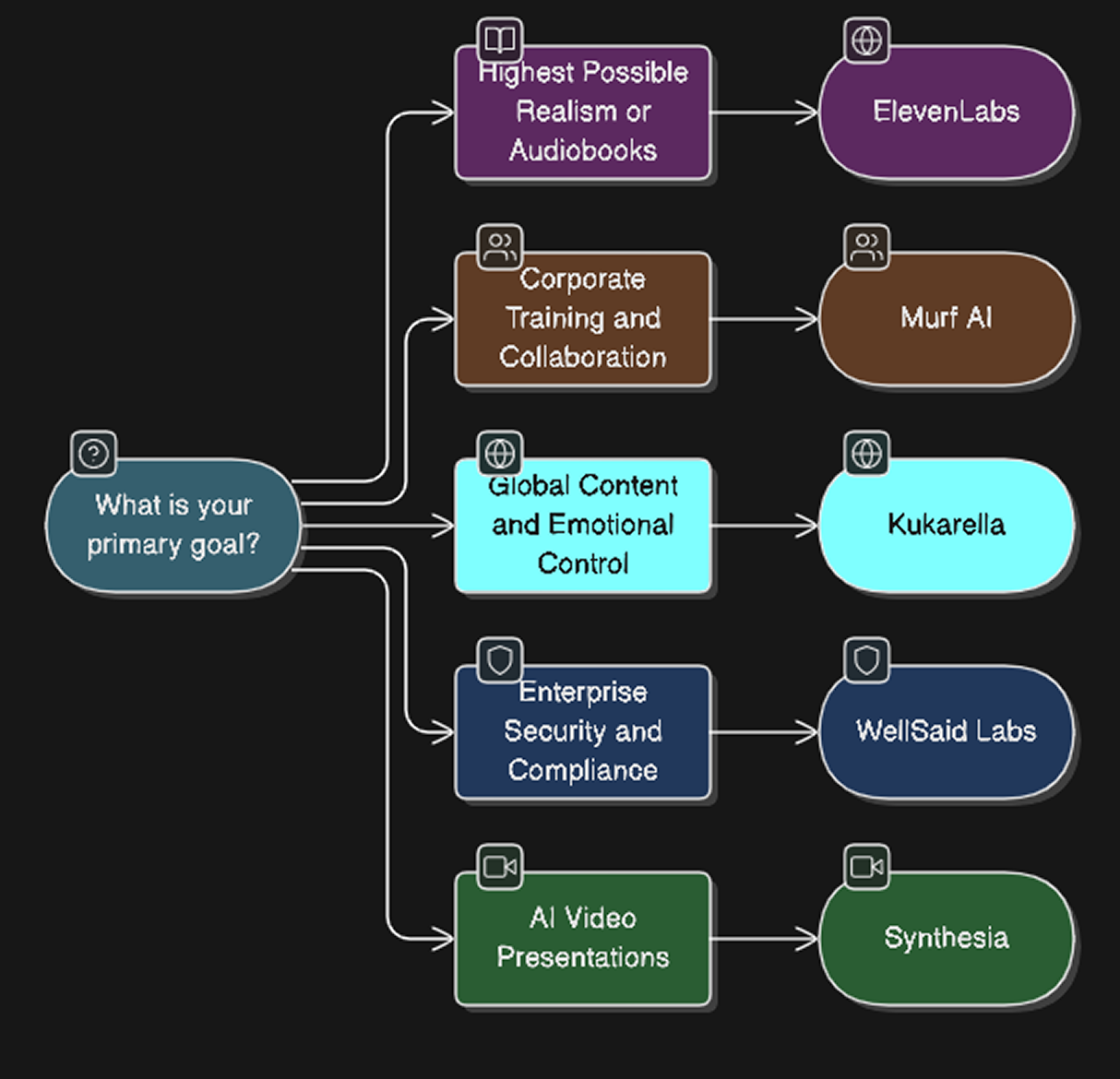

Decision Tree: Choosing Your Platform

- For Audiobook Production: ElevenLabs provides the most natural, engaging narration with emotional depth.

- For Business Training: Murf AI's collaboration features and professional voices serve corporate needs effectively.

- For Global Content Creation: Kukarella's 55+ languages with emotional styling enable authentic multilingual storytelling.

- For Enterprise Compliance: WellSaid Labs meets security requirements with enterprise-grade reliability.

- For Video-First Content: Synthesia integrates AI avatars with voice synchronization for presentations.
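If you want to bake these recommendations into a script or an internal tool, the decision list above reduces to a simple lookup. The sketch below is only an illustration: the use-case keys and the function name are hypothetical labels chosen for this example, and the platform names are copied from the list rather than from any independent evaluation.

```python
# Recommendations copied from the decision list above.
# The dictionary keys are hypothetical labels invented for this sketch.
RECOMMENDATIONS = {
    "audiobook": "ElevenLabs",
    "business_training": "Murf AI",
    "global_content": "Kukarella",
    "enterprise_compliance": "WellSaid Labs",
    "video_first": "Synthesia",
}


def recommend_platform(use_case: str) -> str:
    """Return the platform suggested for a use case, ignoring case and separators."""
    key = use_case.strip().lower().replace(" ", "_").replace("-", "_")
    if key not in RECOMMENDATIONS:
        known = ", ".join(sorted(RECOMMENDATIONS))
        raise ValueError(f"Unknown use case {use_case!r}; expected one of: {known}")
    return RECOMMENDATIONS[key]


print(recommend_platform("Video-First"))
print(recommend_platform("enterprise compliance"))
```

Raising an error for unknown use cases, instead of silently falling back to a default, keeps the tool from recommending a platform for a need the list never covered.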

Advanced Strategies: Maximizing Your Voice Cloning Results

Do's and Don'ts

Power User Techniques

- Audio Quality Optimization: Record training samples in quiet environments using lossless formats. Professional results require 30 minutes to 3 hours of clean audio covering diverse emotional ranges, speaking speeds, and contextual variations.

- Multi-Platform Approach: Advanced users combine platforms strategically—using ElevenLabs for primary narration, Kukarella for character dialogues with emotional depth, and WellSaid Labs for corporate compliance requirements.

- Voice Consistency Maintenance: Create detailed pronunciation guides for technical terms, proper nouns, and brand names. Most platforms allow custom pronunciation dictionaries that ensure consistency across projects.

- Emotional Range Development: Train voice clones using content samples that demonstrate various emotional states—excitement, concern, authority, warmth. This creates more versatile synthetic voices capable of matching content tone.
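Before uploading training audio, it can help to sanity-check each recording automatically. The sketch below uses Python's standard `wave` module to read the basic properties of an uncompressed WAV file and flag anything that looks too short or too low in resolution. The thresholds (60 seconds, 44.1 kHz, 16-bit) are assumptions made for this example, loosely based on the one-minute minimum mentioned earlier, and are not requirements published by any platform.

```python
import os
import tempfile
import wave


def inspect_wav(path: str) -> dict:
    """Read basic properties of an uncompressed PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "sample_rate_hz": rate,
            "channels": wav.getnchannels(),
            "bit_depth": wav.getsampwidth() * 8,
            "duration_s": frames / rate,
        }


def sample_warnings(info: dict, min_seconds: float = 60.0,
                    min_rate_hz: int = 44_100, min_bit_depth: int = 16) -> list[str]:
    """Return human-readable warnings; thresholds are assumed defaults, not platform rules."""
    warnings = []
    if info["duration_s"] < min_seconds:
        warnings.append(f"only {info['duration_s']:.1f}s of audio, below {min_seconds:.0f}s")
    if info["sample_rate_hz"] < min_rate_hz:
        warnings.append(f"sample rate {info['sample_rate_hz']} Hz is below {min_rate_hz} Hz")
    if info["bit_depth"] < min_bit_depth:
        warnings.append(f"bit depth {info['bit_depth']} is below {min_bit_depth}")
    return warnings


if __name__ == "__main__":
    # Demo: write two seconds of 16-bit mono silence, then inspect it.
    demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
    with wave.open(demo_path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(44_100)
        out.writeframes(b"\x00\x00" * 44_100 * 2)
    demo_info = inspect_wav(demo_path)
    print(demo_info)
    print(sample_warnings(demo_info))
```

This only checks file properties. It cannot tell you whether the room was quiet or the speech was varied, so a listening pass is still needed.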

Automation and Scaling

- API Integration Strategies: ElevenLabs, Resemble AI, and Murf AI offer robust APIs enabling automated content production workflows. Developers integrate voice cloning into content management systems, reducing manual intervention.

- Batch Processing Optimization: Process multiple scripts simultaneously during off-peak hours to maximize platform credits and minimize costs. Most platforms offer volume discounts for consistent usage patterns.

- Quality Control Workflows: Establish systematic review processes including human verification for client-facing content, automated flagging of pronunciation errors, and A/B testing different emotional settings for optimal audience engagement.
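The batch and quality-control ideas above can be sketched without committing to any one vendor. In the example below, `synthesize` is a hypothetical callable standing in for whichever platform API you use; it is not a real library function. The sketch retries failed scripts a limited number of times and queues client-facing items for human review, as the quality-control bullet suggests.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class BatchResult:
    audio: dict[str, bytes] = field(default_factory=dict)    # script name -> audio bytes
    needs_review: list[str] = field(default_factory=list)    # client-facing items for a human pass
    failed: dict[str, str] = field(default_factory=dict)     # script name -> last error message


def run_batch(scripts: dict[str, str],
              synthesize: Callable[[str], bytes],
              client_facing: set[str],
              max_retries: int = 2) -> BatchResult:
    """Synthesize every script, retrying failures and flagging client-facing output."""
    result = BatchResult()
    for name, text in scripts.items():
        last_error = ""
        for _attempt in range(1 + max_retries):
            try:
                result.audio[name] = synthesize(text)
                break
            except Exception as exc:  # a real integration would catch narrower errors
                last_error = str(exc)
        else:
            # Every attempt failed: record the error and move on to the next script.
            result.failed[name] = last_error
            continue
        if name in client_facing:
            result.needs_review.append(name)
    return result


def fake_synthesize(text: str) -> bytes:
    """Stand-in for a platform call; rejects empty scripts."""
    if not text:
        raise ValueError("empty script")
    return text.encode("utf-8")


if __name__ == "__main__":
    outcome = run_batch({"intro": "Welcome aboard.", "blank": ""},
                        fake_synthesize, client_facing={"intro"})
    print(sorted(outcome.audio), outcome.needs_review, outcome.failed)
```

Keeping the platform call behind a single callable also makes it easier to swap vendors or to run the same script through two platforms for an A/B comparison.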

Troubleshooting and Edge Cases

Common Pitfalls and Solutions

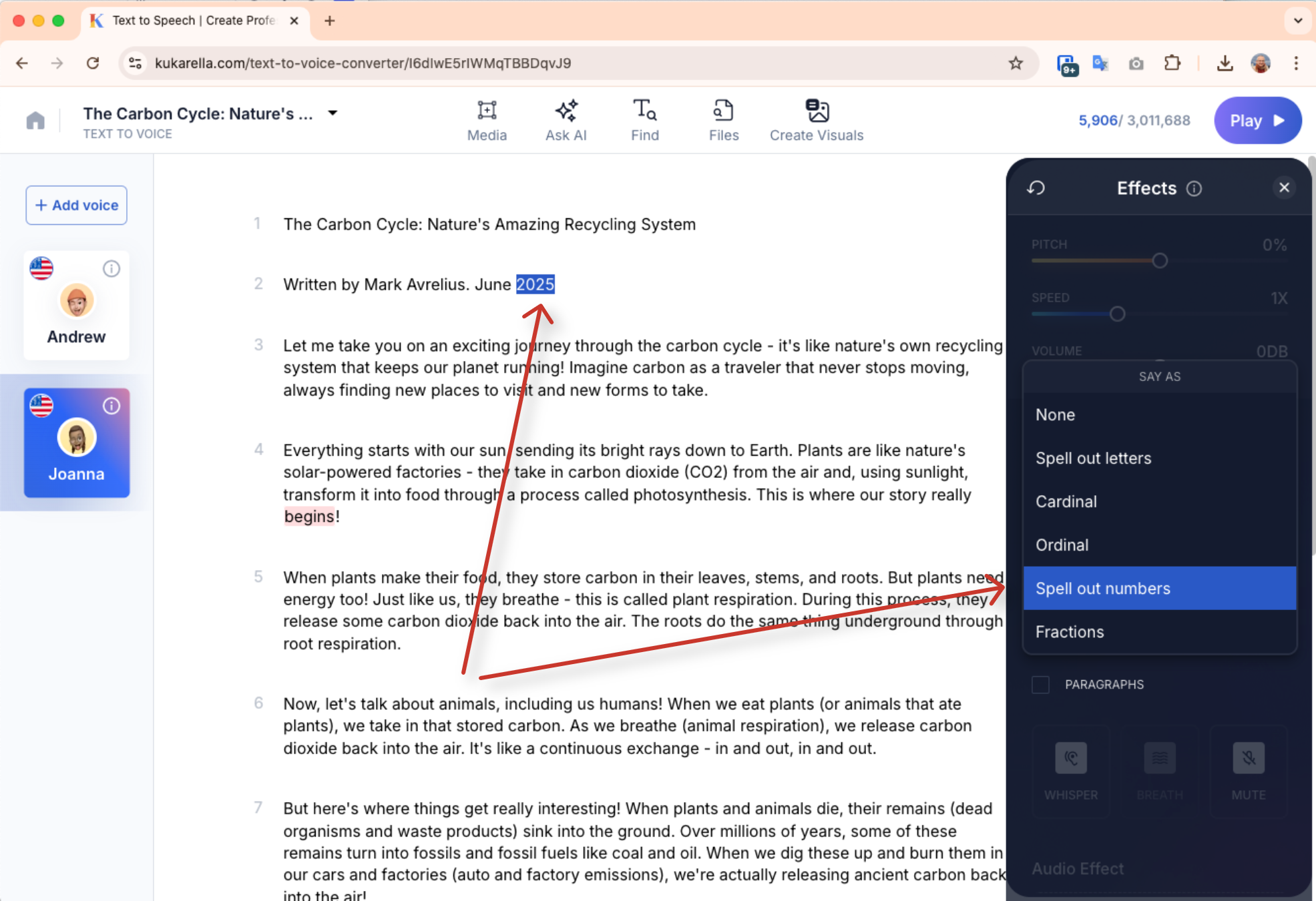

- The Pronunciation Problem: Numbers and dates frequently challenge voice cloning systems.

- Solution: Write out numbers as words ("two thousand twenty-five" instead of "2025") and use platform-specific pronunciation guides.

- Volume and Pacing Inconsistencies: Long paragraphs often produce volume fluctuations and unnatural pacing.

- Solution: Break content into 2-3 sentence segments and use SSML markup for precise control when available, or use effects controls.

voice settings

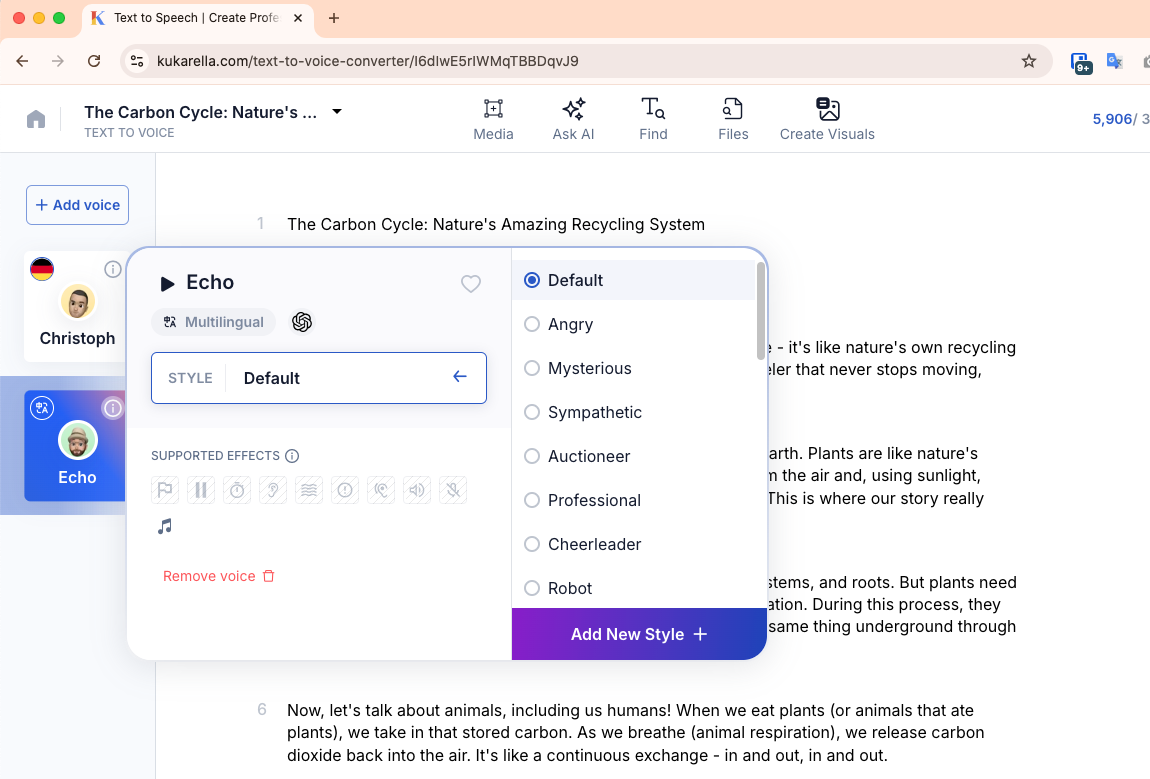

- Emotional Discontinuity: Switching between emotional tones within single audio files creates jarring transitions.

- Solution: Generate separate audio files for different emotional sections and blend using audio editing software, or apply emotional styles to voices from Kukarella's library of voices.

Emotional styles

- Language Mixing Limitations: Most platforms struggle with mid-sentence language switching.

- Solution: Use dedicated multilingual platforms like Kukarella or generate separate audio tracks for each language.
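Two of the fixes above, spelling out numbers and splitting long passages into short segments, are easy to automate before text ever reaches a voice platform. The sketch below is a minimal, platform-independent take on both. It handles only plain English integers from 0 to 999,999 and leaves decimals, comma-separated figures, and longer numbers untouched. The function names are illustrative.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer from 0 to 999,999 in English."""
    if not 0 <= n <= 999_999:
        raise ValueError("supported range is 0 to 999,999")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("-" + ONES[ones] if ones else "")
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        return ONES[hundreds] + " hundred" + (" " + number_to_words(rest) if rest else "")
    thousands, rest = divmod(n, 1000)
    return number_to_words(thousands) + " thousand" + (" " + number_to_words(rest) if rest else "")


# Match standalone integers only; skip digits that are part of 3.5 or 1,000.
_INTEGER = re.compile(r"(?<![\d.,])\d{1,6}(?!\d|[.,]\d)")


def spell_out_numbers(text: str) -> str:
    """Replace standalone integers in text with their spelled-out form."""
    return _INTEGER.sub(lambda m: number_to_words(int(m.group())), text)


def split_into_segments(text: str, max_sentences: int = 3) -> list[str]:
    """Group sentences into segments of at most max_sentences each."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [" ".join(sentences[i:i + max_sentences])
            for i in range(0, len(sentences), max_sentences)]


print(spell_out_numbers("The guide was updated in 2025."))
print(split_into_segments("One. Two. Three. Four.", max_sentences=3))
```

The sentence splitter is deliberately naive and will break on abbreviations such as "Dr." or "e.g.", so review the segments before spending credits on them.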

The Fine Print: Hidden Costs and Legal Considerations

- Credit Consumption Varies Dramatically: Text complexity, voice quality settings, and regeneration attempts affect credit usage unpredictably. Budget 20-30% additional credits for production work.

- Licensing and Rights Management: Enterprise applications require clear voice usage rights. Some platforms retain rights to generated audio, while others offer exclusive licensing for additional fees.

- Compliance Requirements: Healthcare, financial services, and educational institutions must verify platform security certifications, data handling practices, and international privacy regulation compliance.
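The 20-30% buffer suggested above can be turned into a quick estimate. The sketch below assumes, purely for illustration, that a platform charges a whole number of credits per character (one by default). Actual pricing differs by platform and plan, so treat `credits_per_char` as a placeholder to replace with your provider's real rate.

```python
def estimate_credits(char_count: int, buffer_percent: int = 25,
                     credits_per_char: int = 1) -> int:
    """Estimate credits to budget, including a safety buffer, rounded up.

    credits_per_char is an assumed rate for this sketch, not a real platform price.
    """
    if char_count < 0 or buffer_percent < 0 or credits_per_char < 0:
        raise ValueError("inputs must be non-negative")
    base = char_count * credits_per_char
    # Integer arithmetic avoids floating-point rounding surprises; +99 rounds up.
    return (base * (100 + buffer_percent) + 99) // 100


script_chars = 100_000
low = estimate_credits(script_chars, buffer_percent=20)
high = estimate_credits(script_chars, buffer_percent=30)
print(f"Budget between {low:,} and {high:,} credits for {script_chars:,} characters")
```

For a 100,000-character project at one credit per character, this gives a budget range of 120,000 to 130,000 credits.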

The Future Soundscape: Emerging Trends and Predictions

Plot Twist: The Unexpected Trajectories

Cross-Language Voice Preservation: ElevenLabs CEO Mati Staniszewski predicts that language barriers will dissolve: "You will be able to go to another country and interact with another person while still carrying your own voice, your own emotion, intonation, and the person can understand you... I think Babel Fish will be there and the technology will make it possible."

Educational Transformation: Personalized learning assistants using familiar voices—teachers, parents, or fictional characters—will guide individual educational journeys. Kukarella's dialogue creation features anticipate this trend, enabling complex character interactions for educational storytelling.

Cross-Language Voice Preservation

Medical Voice Banking: Individuals with degenerative diseases increasingly preserve their voices before speech loss. Google DeepMind's work with ALS patient Tim Shaw demonstrated profound emotional impact: "It has been so long since I've sounded like that, I feel like a new person. I felt like a missing part was put back in place."

voice banking

Regulatory Landscape Evolution

Government agencies are preparing comprehensive frameworks. The FTC has launched challenges to develop voice authentication systems, while European regulators consider voice cloning under GDPR biometric data protections.

Industry self-regulation accelerates. Leading platforms implement watermarking technologies, consent verification systems, and detection algorithms. OpenAI's approach emphasizes: "We recognize that generating speech that resembles people's voices has serious risks, which are especially top of mind in an election year."

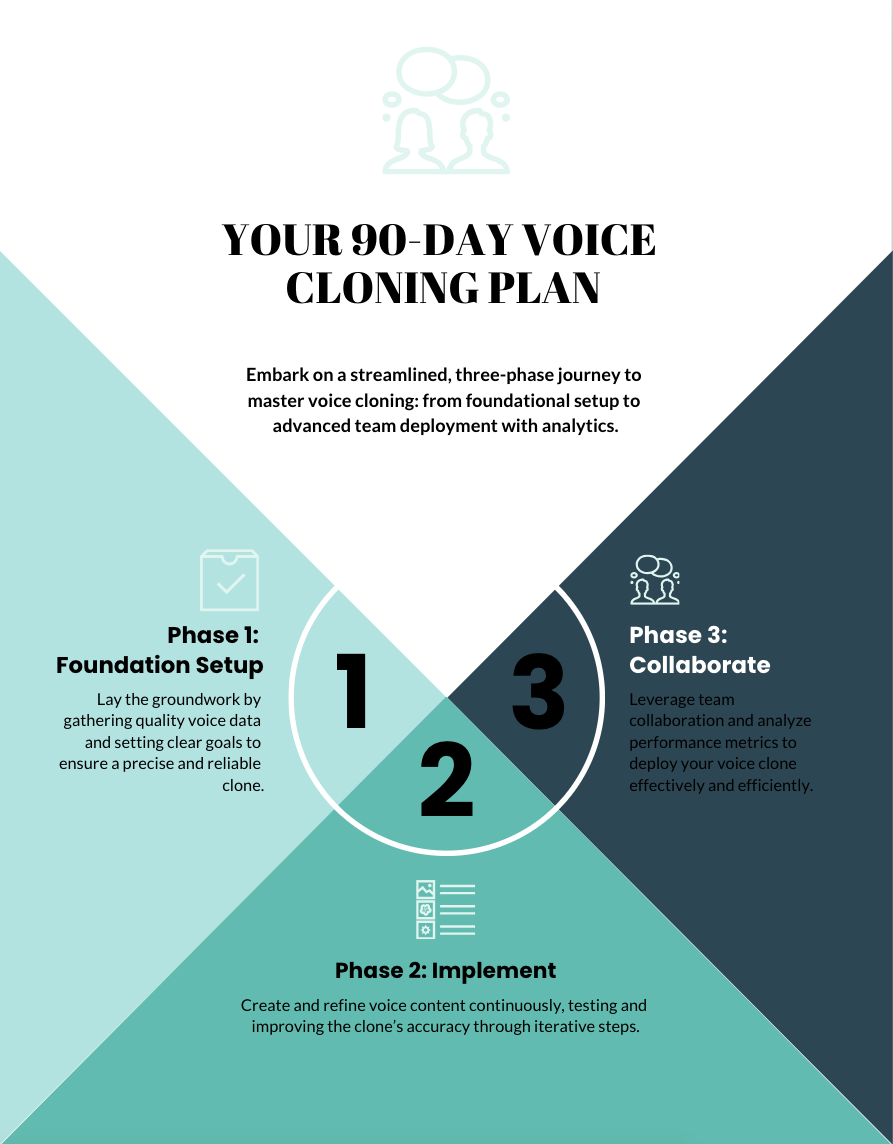

Your Voice Cloning Action Plan

Phase 1: Foundation Setting (Week 1)

- Choose Your Primary Platform: Test free tiers of ElevenLabs, Kukarella, and Murf AI

- Record High-Quality Samples: Create 30-60 minutes of clean, diverse speech content

- Define Use Cases: Identify specific applications (audiobooks, training, marketing)

- Establish Quality Standards: Set benchmarks for acceptable voice similarity and naturalness

Phase 2: Implementation (Weeks 2-4)

- Train Your Voice Clone: Upload samples and optimize platform settings

- Create Test Content: Generate sample audio across different content types

- Iterate and Improve: Refine pronunciation guides and emotional settings

- Scale Production: Implement batch processing and workflow automation

Phase 3: Advanced Deployment (Month 2+)

- Multi-Platform Integration: Leverage specialized platforms for specific use cases

- Team Training: Educate content creators on voice cloning best practices

- Quality Assurance: Implement systematic review and approval processes

- Performance Monitoring: Track audience engagement and production efficiency.

Your 90-Day Voice Cloning Plan

Essential Considerations Checklist

✓ Legal Compliance: Verify voice usage rights and consent requirements

✓ Budget Planning: Account for credit consumption variability and platform scaling costs

✓ Technical Integration: Ensure compatibility with existing content production workflows

✓ Ethical Guidelines: Establish clear policies for voice cloning usage and disclosure

✓ Backup Strategies: Maintain multiple platform relationships to avoid single-point failures

Conclusion: Your Voice, Amplified and Unlimited

Voice cloning technology has matured from experimental curiosity to production-ready tool, democratizing content creation for millions while generating billions in economic value. Whether you're preserving family voices, scaling educational content, or building global media empires, AI voice cloning offers unprecedented creative possibilities.

The key to success lies in thoughtful platform selection, ethical implementation, and strategic application. ElevenLabs leads in quality and reliability, WellSaid Labs dominates enterprise compliance, while Kukarella excels in multilingual emotional expression. Each serves distinct needs within the expanding voice cloning ecosystem.

The future belongs to creators who embrace these tools responsibly. As Mati Staniszewski envisions, voice will become the primary interface for human-technology interaction, breaking down language barriers and enabling more authentic digital communication than ever before possible.

Your voice—preserved, enhanced, and infinitely scalable—awaits. The question isn't whether to adopt voice cloning technology, but how quickly you can harness its transformative potential for your unique creative vision.

FAQ Section:

Q: How much audio do I need to clone a voice effectively?

- A: Modern systems require as little as 1 minute for basic cloning, though 30 minutes to 3 hours produces professional results.

Q: Can voice cloning work across different languages?

- A: Advanced platforms like Kukarella support cross-language cloning across 55+ languages while preserving vocal characteristics.

Q: What legal considerations apply to voice cloning?

- A: Always obtain explicit consent from voice owners, verify usage rights, and comply with platform terms regarding commercial applications.

Q: How do I prevent my voice from being cloned without permission?

- A: Use privacy settings on social media, avoid posting long audio samples publicly, and consider voice authentication systems for sensitive accounts.

Q: Which industries benefit most from voice cloning?

- A: Education, entertainment, accessibility technology, corporate training, and content localization show highest adoption rates.

Q: How accurate is current voice cloning technology?

- A: Leading platforms achieve 4.5+ out of 5.0 human similarity ratings, with some implementations indistinguishable from original voices.

Q: What are the main limitations of voice cloning?

- A: Challenges include emotional range consistency, pronunciation of technical terms, real-time generation latency, and cross-language mixing capabilities.