Ever felt that uncanny valley chill of a voice clone that’s almost perfect? You’re not alone. Content creator ‘Alina’ spent days meticulously recording audio for her new YouTube channel, only to find her AI-cloned voice sounded… well, robotic. It lacked the warmth and personality of her own voice. It was a disheartening experience, one that many creators face when they first delve into the world of AI voice generation.

This guide is for Alina and for everyone who has hit a roadblock. We'll explore why these issues happen and provide a detailed, tool-oriented approach to fixing them, ensuring your digital voice is as engaging and authentic as your own.

Deep Dive: Unpacking and Solving Common Voice Cloning Problems

Let's dissect the most frequent complaints about voice cloning and explore actionable solutions.

Problem 1: "My Voice Clone Sounds Robotic and Monotone"

This is the number one frustration. The clone lacks the natural pitch variations—the "music"—of human speech.

Why it happens:

- Poor Quality Input Audio: Background noise, echo, and inconsistent volume flatten the output.

- Monotonous Recording: You read your script like a robot, so the AI learns you're a robot.

- Insufficient Data: Not enough audio for the AI to learn your full vocal range.

How to fix it:

- Optimize Your Recording Environment: A quiet room and a quality microphone are non-negotiable. (Refer to our "Audio Preparation Masterclass" for a deep dive).

- Inject Emotion into Your Recording: Speak naturally, with the energy you want your clone to have. Tell a story. Argue a point. The more emotional variance you provide, the richer the AI's dataset will be.
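If you want a rough sanity check on a recording before uploading it, noise and inconsistent volume can be measured approximately. The following is a minimal Python sketch, not part of any platform's tooling. It assumes a 16-bit PCM WAV file that contains at least one pause, and the function names (`read_mono_16bit`, `level_report`) and the 50 ms window are illustrative choices.

```python
import math
import sys
import wave
from array import array


def read_mono_16bit(path):
    """Load a 16-bit PCM WAV file; return (samples of first channel, sample rate)."""
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM audio")
        channels = wav.getnchannels()
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())
    samples = array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV data is little-endian
    return list(samples[::channels]), rate


def dbfs(value, full_scale=32768.0):
    """Convert a linear amplitude to decibels relative to full scale."""
    if value <= 0:
        return float("-inf")
    return 20 * math.log10(value / full_scale)


def level_report(samples, rate, window_s=0.05):
    """Summarize peak level plus the loudest and quietest short-window RMS levels."""
    window = max(1, int(rate * window_s))
    rms_values = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms_values.append(math.sqrt(sum(s * s for s in chunk) / window))
    if not rms_values:
        raise ValueError("recording is shorter than one analysis window")
    peak = max(abs(s) for s in samples)
    return {
        "peak_dbfs": dbfs(peak),
        "loudest_dbfs": dbfs(max(rms_values)),
        # The quietest window is only a rough stand-in for the room's noise floor.
        "quietest_dbfs": dbfs(min(rms_values)),
        "clipped": peak >= 32767,
    }
```

A wide gap between `loudest_dbfs` and `quietest_dbfs` suggests the pauses are genuinely quiet, while a narrow gap suggests background noise is filling them. What counts as wide enough depends on your setup, so treat the numbers as a way to compare takes rather than a pass/fail test.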

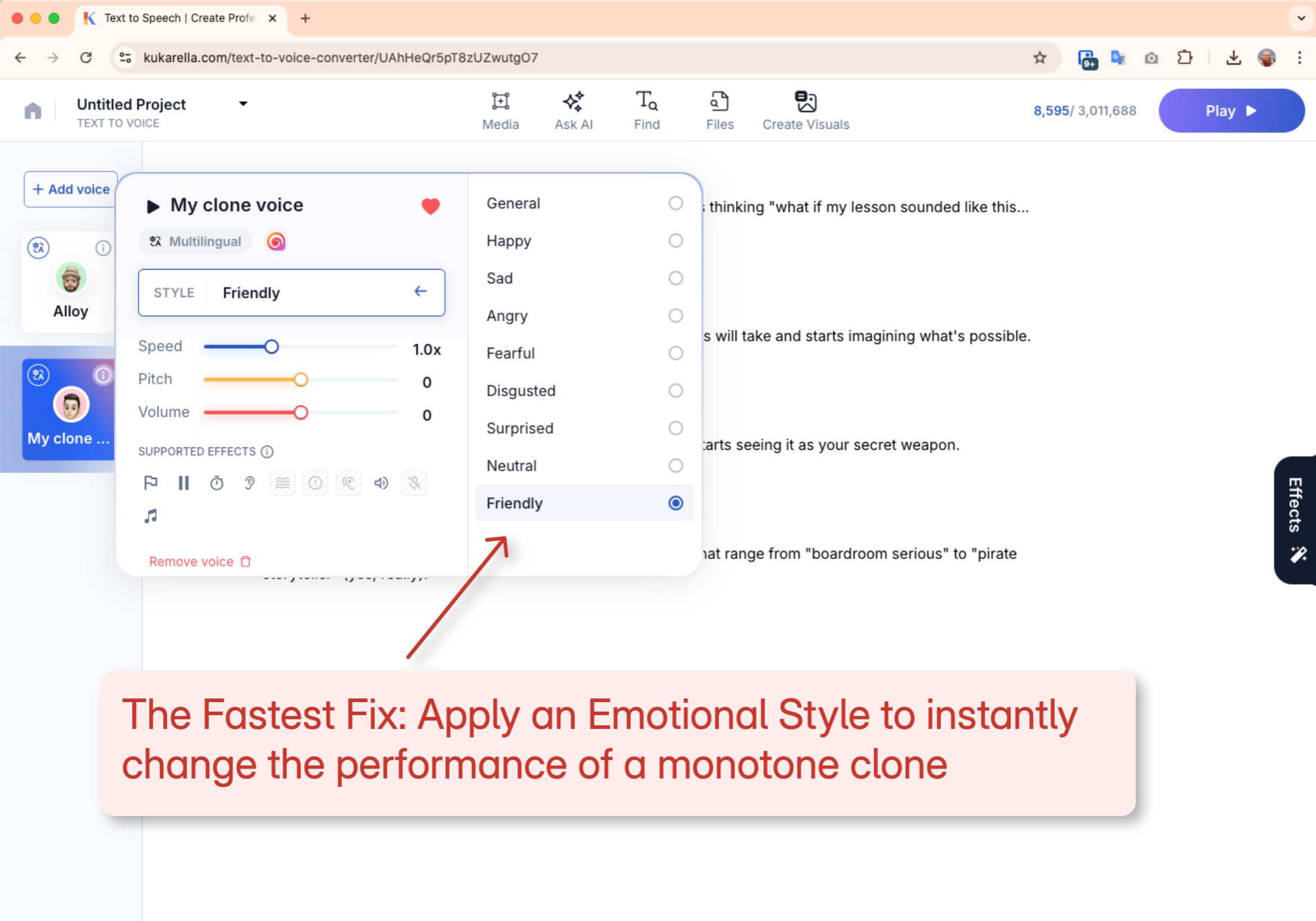

- Leverage Advanced Platform Features:

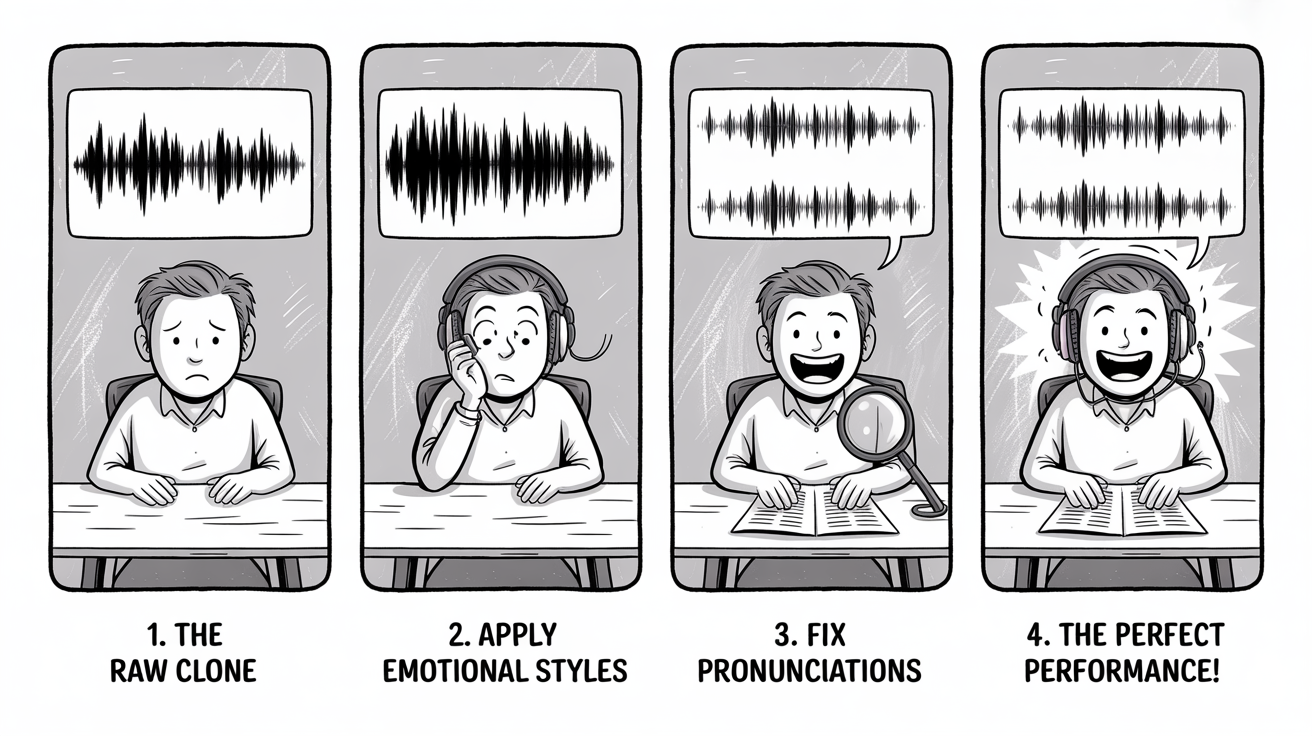

Kukarella's Emotional Styles: Kukarella has an advantage here: it lets you apply emotional styles (like "Cheerful," "Angry," or "Whispering") to your cloned voice. Even from a single, neutral recording, you can generate speech with a wide range of emotions, directly combating the monotone problem. You can also create custom voice styles with a simple text prompt, which gives you direct control over the clone's emotional delivery.

Problem 2: "The Emotional Tone is Wrong or Missing"

Your voice clone might sound clear but lack the intended emotion for a specific context. A Reddit user on r/ElevenLabs noted that some clones of older voices sounded "too perfect," as if the AI had restored their voice to a younger, less character-filled version.

Why it happens:

- Mismatched Training Data: You recorded a calm, meditative reading to train a clone you now want to use for an energetic marketing video.

- Overly "Perfected" Clones: The AI can sometimes "smooth out" the unique, emotional imperfections of a voice, making it sterile.

How to fix it:

- Match Recording Style to Intended Use: If you need a clone for audiobook narration, your training data should be you reading a book.

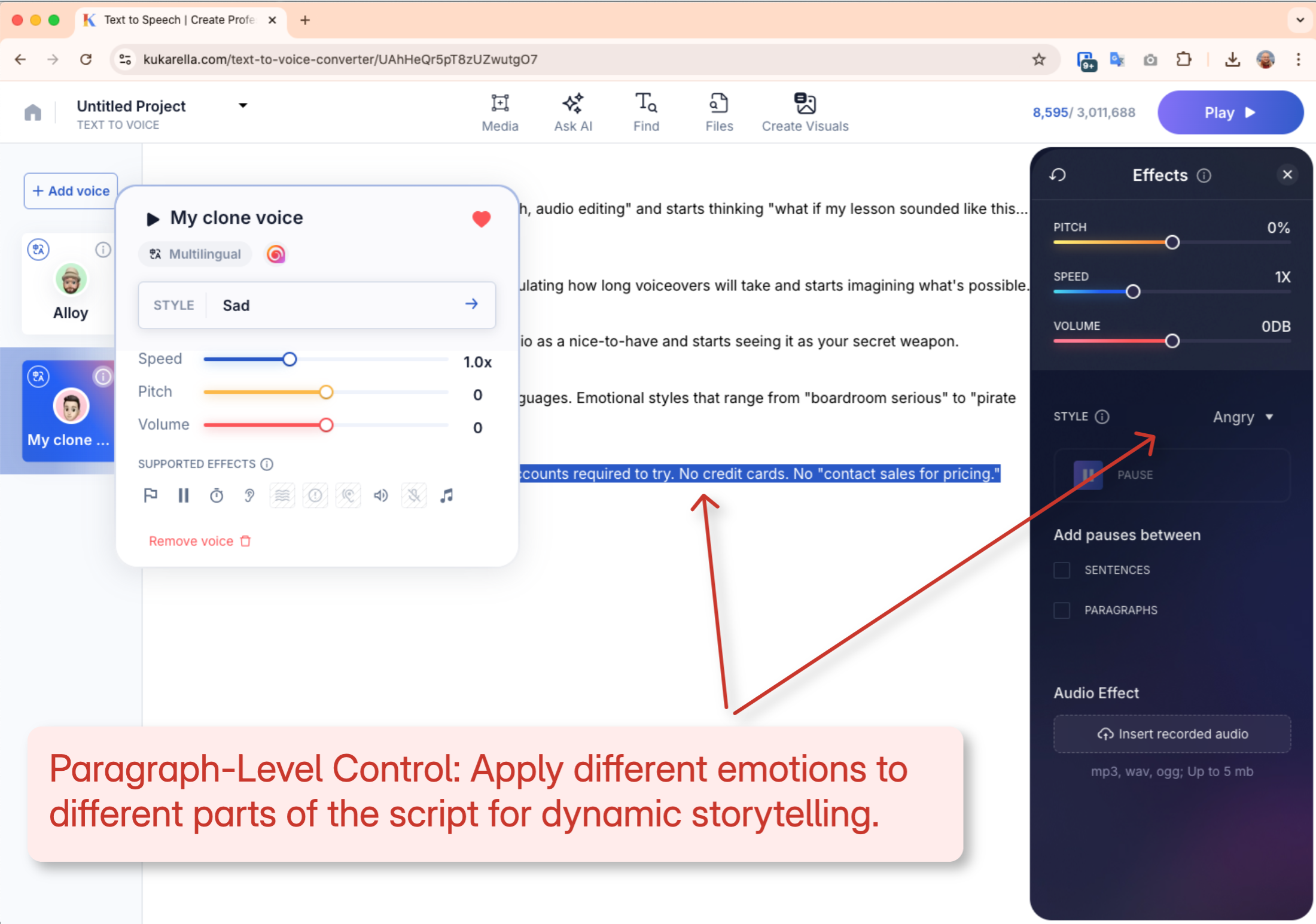

- Utilize Post-Cloning Emotional Controls: This is where your choice of platform becomes critical.

Kukarella's Granular Control: This is another area where Kukarella excels. Their "Voice Styles" can be applied at the paragraph level, meaning you can have a single script with varying emotional tones. For instance, a character in a story could speak with a "Sad" tone in one paragraph and an "Angry" tone in the next, all from the same base voice clone. This level of granular control is a powerful tool for creating dynamic and engaging audio.
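If you draft scripts outside the platform, it can help to note the intended style next to each paragraph before you apply styles in the editor. The sketch below is one hypothetical way to do that in Python. The `[Style]` bracket tag is a convention made up for this example, not Kukarella syntax, and `StyledParagraph` and `parse_styled_script` are illustrative names.

```python
import re
from dataclasses import dataclass


@dataclass
class StyledParagraph:
    text: str
    style: str


def parse_styled_script(script, default_style="Neutral"):
    """Split a script on blank lines; a leading [Style] tag sets that paragraph's style."""
    paragraphs = []
    for block in re.split(r"\n\s*\n", script):
        text = block.strip()
        if not text:
            continue
        style = default_style
        if text.startswith("["):
            tag, closed, rest = text[1:].partition("]")
            # Only treat it as a tag if the bracket closes and text follows it.
            if closed and tag.strip() and rest.strip():
                style, text = tag.strip(), rest.strip()
        paragraphs.append(StyledParagraph(text=text, style=style))
    return paragraphs
```

For example, a script with `[Sad]` on the first paragraph, `[Angry]` on the second, and no tag on the third yields three entries with the styles `Sad`, `Angry`, and `Neutral`, which you can then use as a checklist when assigning styles paragraph by paragraph.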

Problem 3: "My Voice Clone Mispronounces Words or Has an Unnatural Cadence"

Incorrect pronunciations, especially of unique names or jargon, and awkward pacing can instantly break the illusion of a real human voice.

Why it happens:

- Unfamiliar Vocabulary: The AI may not have been trained on specific technical terms, brand names, or slang, leading to mispronunciations.

- Punctuation and Pacing: The way you use punctuation in your script heavily influences the clone's cadence. Long, unbroken sentences can lead to a rushed, unnatural delivery.

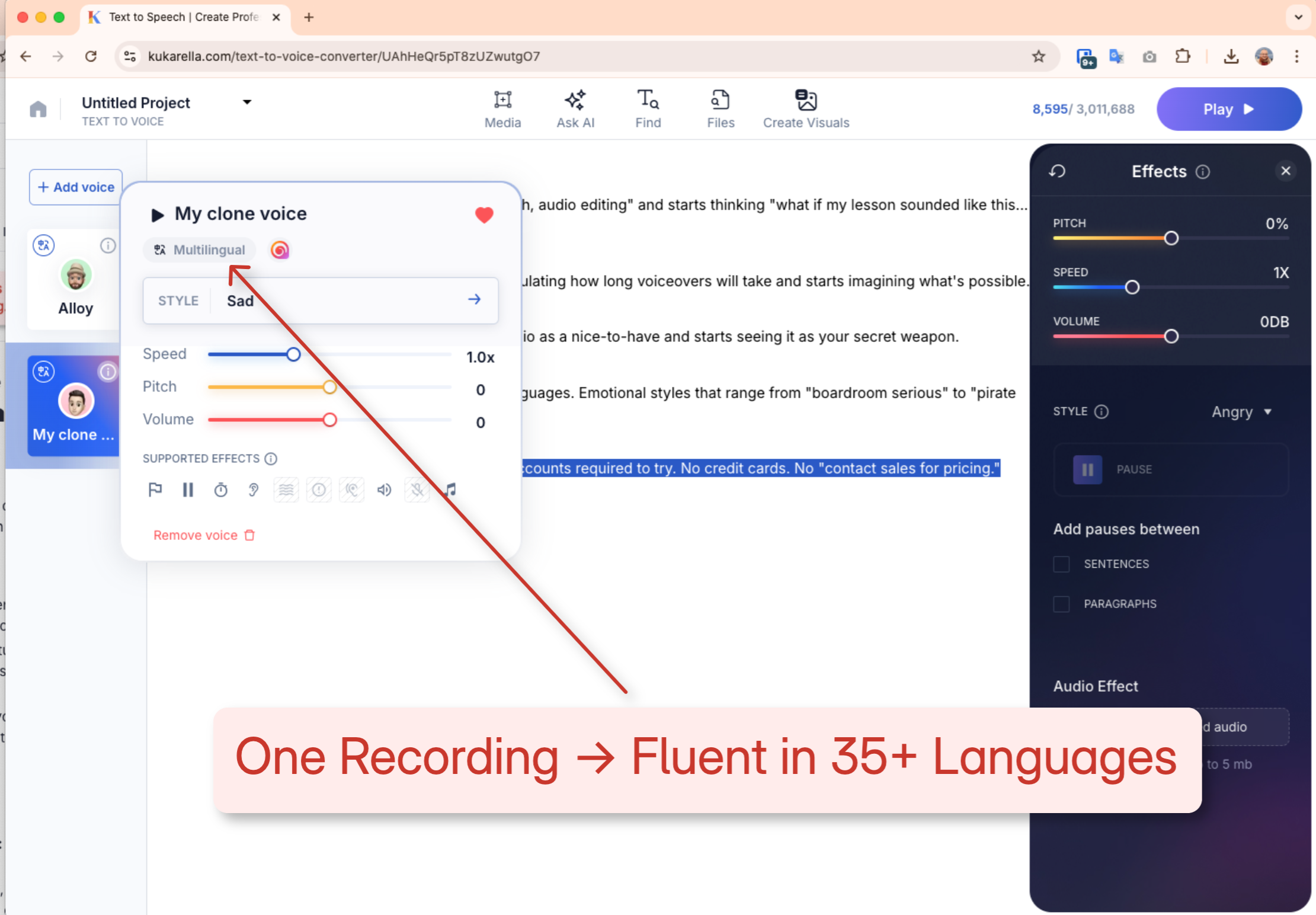

- Language and Accent Mismatch: If you record your sample in English but then generate speech in Spanish, the clone might have a noticeable English accent.
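To spot the long, unbroken sentences described above before you generate audio, a small script can flag them for you. This is a minimal Python sketch under stated assumptions: sentences end with `.`, `!`, or `?`, a pause is any comma, semicolon, or colon, and the 20-word limit is an arbitrary starting point rather than a rule from any platform. The function names are illustrative.

```python
import re

SENTENCE_END = re.compile(r"(?<=[.!?])\s+")
PAUSE = re.compile(r"[,;:]")


def longest_unbroken_run(sentence):
    """Return the largest number of words between two pause marks in a sentence."""
    return max(len(part.split()) for part in PAUSE.split(sentence))


def flag_rushed_sentences(script, max_run=20):
    """Return (run_length, sentence) for sentences whose longest pause-free run exceeds max_run."""
    flagged = []
    for sentence in SENTENCE_END.split(script.strip()):
        if not sentence:
            continue
        run = longest_unbroken_run(sentence)
        if run > max_run:
            flagged.append((run, sentence))
    return flagged
```

Each flagged sentence is a candidate for an extra comma, a split into two sentences, or a line break. Abbreviations such as "e.g." will confuse the sentence splitter, so read the results as hints.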

How to fix it:

- Use Phonetic Spelling and Custom Dictionaries: Many platforms allow you to provide phonetic spellings for tricky words.

- Master Your Punctuation: Use commas, periods, and line breaks strategically to create natural pauses and a more conversational rhythm.

- Record in the Target Language: For the most natural-sounding clone in a specific language, provide your sample audio in that same language.

- Leverage Kukarella's Multilingual Capabilities: Kukarella's voice cloning technology is multilingual, capable of speaking approximately 35 languages from a single English voice sample. This is a significant advantage for creators targeting a global audience, as it removes the need to record separate samples for each language, although a sample recorded in the target language will still tend to sound the most natural.
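Where a platform has no built-in pronunciation dictionary, phonetic spelling can be applied to the script itself before you paste it in. The following Python sketch shows one way to do that. The respellings are illustrative, `apply_pronunciations` is a hypothetical helper, and how well a given respelling works will vary by voice and platform.

```python
import re


def apply_pronunciations(script, respellings):
    """Replace whole-word matches (case-insensitive) with phonetic respellings."""
    if not respellings:
        return script
    # Try longer terms first so multi-word entries win over their parts.
    terms = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(
        r"(?<!\w)(" + "|".join(re.escape(term) for term in terms) + r")(?!\w)",
        re.IGNORECASE,
    )
    lookup = {term.lower(): spoken for term, spoken in respellings.items()}
    return pattern.sub(lambda match: lookup[match.group(1).lower()], script)


if __name__ == "__main__":
    respellings = {"Nginx": "engine-ex", "SQL": "sequel"}
    print(apply_pronunciations("Install nginx, then open the SQL console.", respellings))
```

Matching on whole words keeps the replacement from touching longer words that merely contain a term, so "MySQL" is left alone when only "SQL" is in the dictionary. Keep the original script as your source of truth and apply the respellings as a final step, since the modified text is meant for the voice engine, not for readers.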

The Tool Ecosystem: A Comparative Look at Voice Cloning Platforms

The voice cloning market is brimming with options, each with its own strengths and weaknesses. Here's a look at some of the major players:

| Feature | Kukarella | ElevenLabs | Murf.ai | Play.ht |
| --- | --- | --- | --- | --- |
| Robotic Sound Reduction | Excellent (Emotional Styles, Custom Styles) | Good (Stability & Clarity sliders) | Good (Pitch, Speed adjustments) | Fair (Can sound robotic) |
| Emotional Control | Excellent (Paragraph-level styles, custom styles) | Good (Requires careful prompting) | Good (Limited emotional tones) | Fair (Lacks nuanced emotional inflection) |
| Multilingual Support | Excellent (~35 languages from one clone) | Good (29 languages) | Good (20+ languages) | Excellent (130+ languages) |
| Ease of Use | Excellent (Intuitive interface) | Good (Simplified interface) | Excellent (User-friendly) | Good (Can be tedious) |
| Unique Features | Create voices from text description, All-in-one platform (TTS, transcription, etc.) | Powerful voice cloning from short samples | Voice changer, Canva & Google Slides integration | Large library of pre-built voices |

Kukarella's Unique Position:

Kukarella stands out as an all-in-one platform that goes beyond simple voice cloning. It integrates text-to-speech, transcription, and even AI-powered content creation into a single workflow. This is particularly beneficial for users who want to streamline their entire content production process.

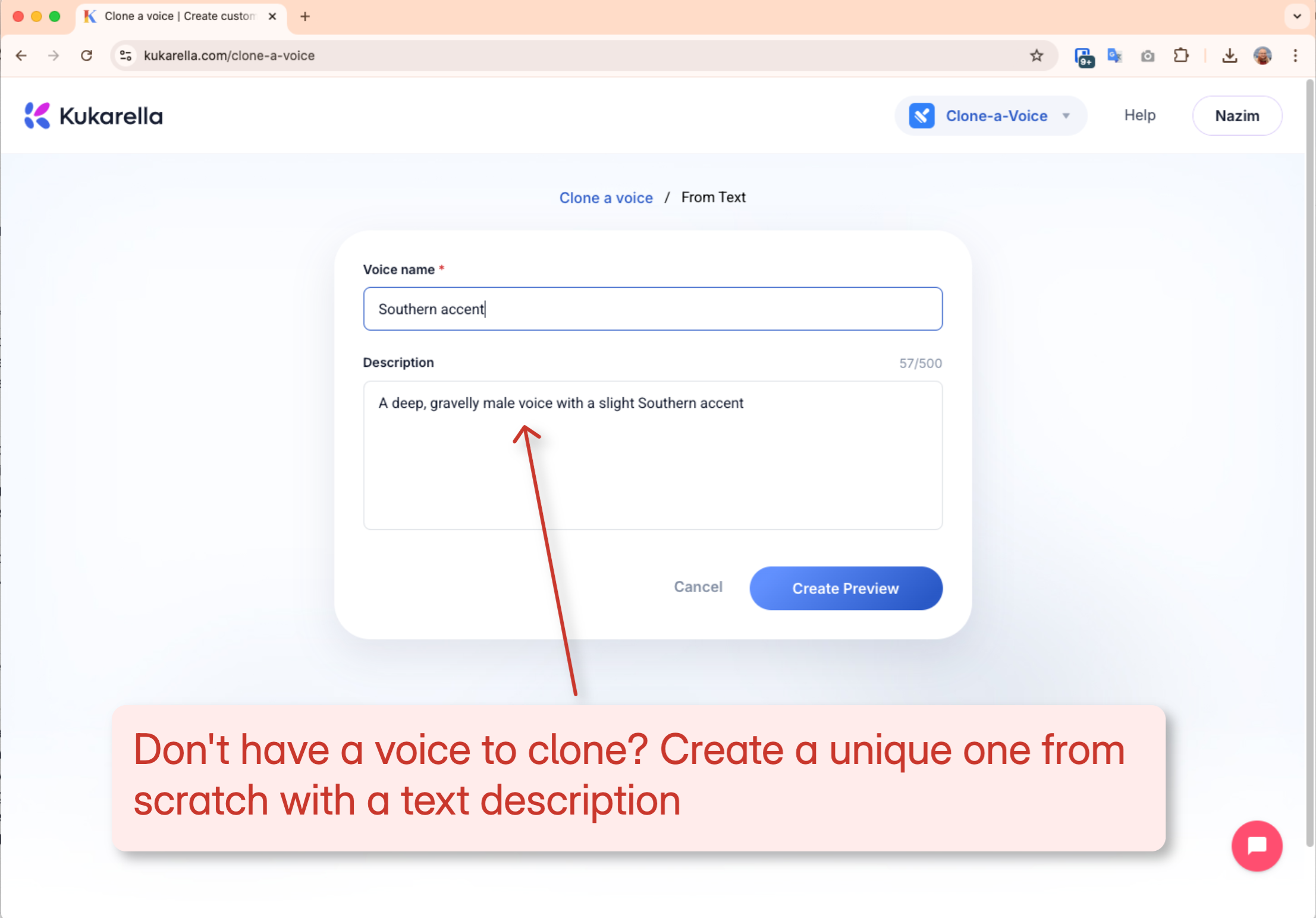

The ability to create a voice from a simple text description is valuable for those who don't have a specific voice to clone but want a unique voice for their brand or project. For instance, you could describe "a deep, gravelly male voice with a slight Southern accent," and Kukarella's AI will generate it for you. This feature sets it apart from competitors that rely solely on audio samples.

Advanced Strategies for Lifelike Voice Clones

For those looking to push the boundaries of voice cloning, here are some advanced techniques:

- Iterative Cloning: Don't be afraid to delete and re-clone your voice with improved audio samples. Many users find that their second or third attempt, after learning from the initial results, is significantly better.

- Combining Cloned and Synthetic Voices: Use your high-quality voice clone for primary narration and leverage a platform's library of synthetic voices for secondary characters or accents.

- Scripting for the Ear, Not the Eye: Write your scripts in a conversational style, using contractions and simpler sentence structures. Read your script aloud to identify any awkward phrasing before generating the audio.

- Leveraging an API for Automation: For developers and businesses, using a platform's API can automate the process of generating voiceovers for dynamic content, such as personalized news briefings or real-time customer support responses.
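The automation idea in the last point can be sketched without committing to any particular vendor. In the Python example below, `synthesize` is a placeholder for whatever call your platform's API or SDK actually provides. It is assumed to take text and return audio bytes, and the `.mp3` suffix, the `id` field, and the function name `render_briefings` are likewise assumptions made for illustration.

```python
from pathlib import Path


def render_briefings(template, recipients, synthesize, out_dir, suffix=".mp3"):
    """Fill a text template per recipient, synthesize it, and write one audio file each.

    `synthesize` is a stand-in for a real text-to-speech API call: text in, bytes out.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = {}
    for recipient in recipients:
        text = template.format(**recipient)
        audio = synthesize(text)
        path = out / f"{recipient['id']}{suffix}"
        path.write_bytes(audio)
        written[recipient["id"]] = path
    return written
```

Passing the synthesis function in as an argument keeps the batching logic separate from the vendor call, so you can test the workflow with a fake function and swap in the real API client later. A production version would also need error handling, retries, and rate limiting according to the platform's documentation.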

Troubleshooting Edge Cases

What about those less common but equally frustrating problems?

- The Clone Sounds "Too Young" or Lacks "Raspiness": As mentioned, some AI models can over-correct for what they perceive as imperfections. If you have a naturally raspy or mature voice, try to emphasize those qualities in your sample recording. A Reddit user on r/ElevenLabs described this exact issue, noting that their clone sounded "too pristine."

- Difficulty with Specific Accents: If the AI struggles with a particular accent, try providing a longer and more varied audio sample. Also, ensure the platform you're using has been trained on a diverse dataset of accents.

The Future of Voice Cloning: What's Next?

The field of voice cloning is evolving at a breakneck pace. We can expect to see:

- Hyper-Realism: AI models will become even better at capturing the subtle nuances of human speech, making it nearly impossible to distinguish between real and synthetic voices.

- Real-Time Voice Conversion: The ability to change your voice in real-time during live streams or calls will become more widespread and accessible.

- Enhanced Emotional Nuance: Future AIs will have a deeper understanding of emotional context, allowing for even more expressive and empathetic voice clones.

- Increased Personalization: Users will have even more granular control over the creation and modification of their digital voices.

Your Action Plan for Perfecting Your Voice Clone

- Start with a High-Quality Recording: This is the single most important step. Use a good microphone in a quiet environment.

- Record with Intention: Match your recording style and emotion to the intended use case of your voice clone.

- Choose the Right Platform: Evaluate different platforms based on their features, especially their ability to handle common issues like robotic tones and lack of emotion. Kukarella's emotional styles and multilingual capabilities make it a strong contender.

- Iterate and Refine: Don't be afraid to experiment. Try different audio samples, adjust settings, and re-clone your voice until you're satisfied with the result.

- Embrace Advanced Features: Explore your platform's advanced settings, such as custom pronunciations and paragraph-level style adjustments, to fine-tune your clone's performance.

By following this comprehensive guide, you'll be well-equipped to tackle any voice cloning challenge that comes your way, transforming your digital voice from a source of frustration into a powerful tool for engaging your audience.