A dramatic close-up photo showing a person's face split between human and a digital soundwave, symbolizing the concept of digital voice identity

In October 2023, Zelda Williams, daughter of the beloved late actor Robin Williams, posted a message to her followers that sent a chill through the creative community. She spoke of AI-generated "recreations" of her father, calling the practice "personally disturbing" and the technology "Frankenstein's monster."

"I've witnessed for YEARS how many people want to train these models to be/sound like actors who are no longer with us," she wrote. "This isn't theoretical, it is very very real."

Her words crystallized a fear that has been simmering just beneath the surface of this revolutionary technology. The ability to clone a human voice is not merely a technical achievement; it touches the very core of our identity, legacy, and personal autonomy. The question is no longer whether we can do it, but whether we should, and under what circumstances.

This is not an abstract debate. In January 2024, a fake robocall using an AI-cloned voice of President Joe Biden urged New Hampshire residents not to vote in the primary. YouTuber Jeff Geerling publicly called out a company that had cloned his voice, without his permission, to market its own products. The ethical battleground is here, and every creator, business, and platform user is on it.

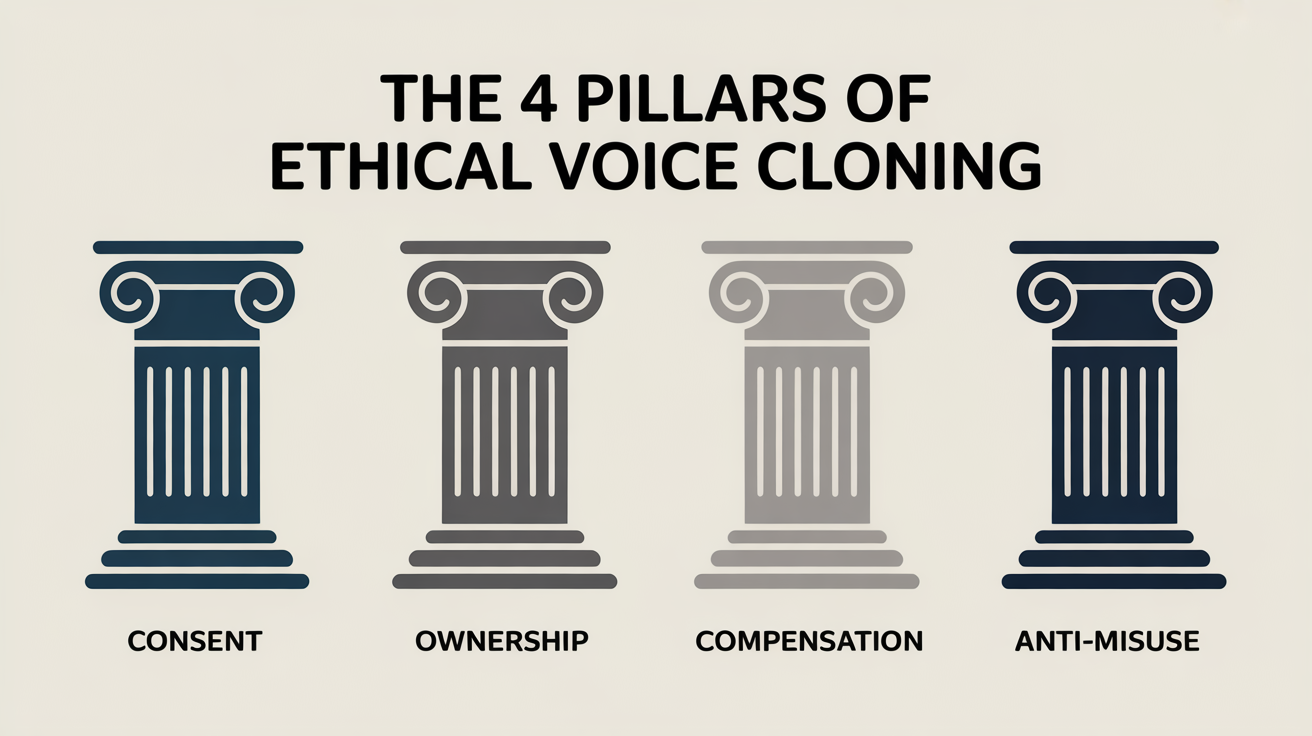

Ignoring the ethical and legal framework of voice cloning is not just irresponsible; it is a direct threat to your brand, your audience's trust, and your legal standing. This guide is an authoritative, no-nonsense look at the critical pillars of responsible voice cloning: Consent, Ownership, Compensation, and the fight against Misuse. This is your playbook for innovating with integrity.

Infographic showing four classical columns labeled "Consent," "Ownership," "Compensation," and "Anti-Misuse," representing the pillars of ethical voice cloning

Pillar 1: The Principle of Consent - The Unbreakable Foundation

This is the absolute, unequivocal starting point for all ethical voice cloning. Without explicit, informed consent from the person whose voice is being cloned, the act is an ethical violation. Period.

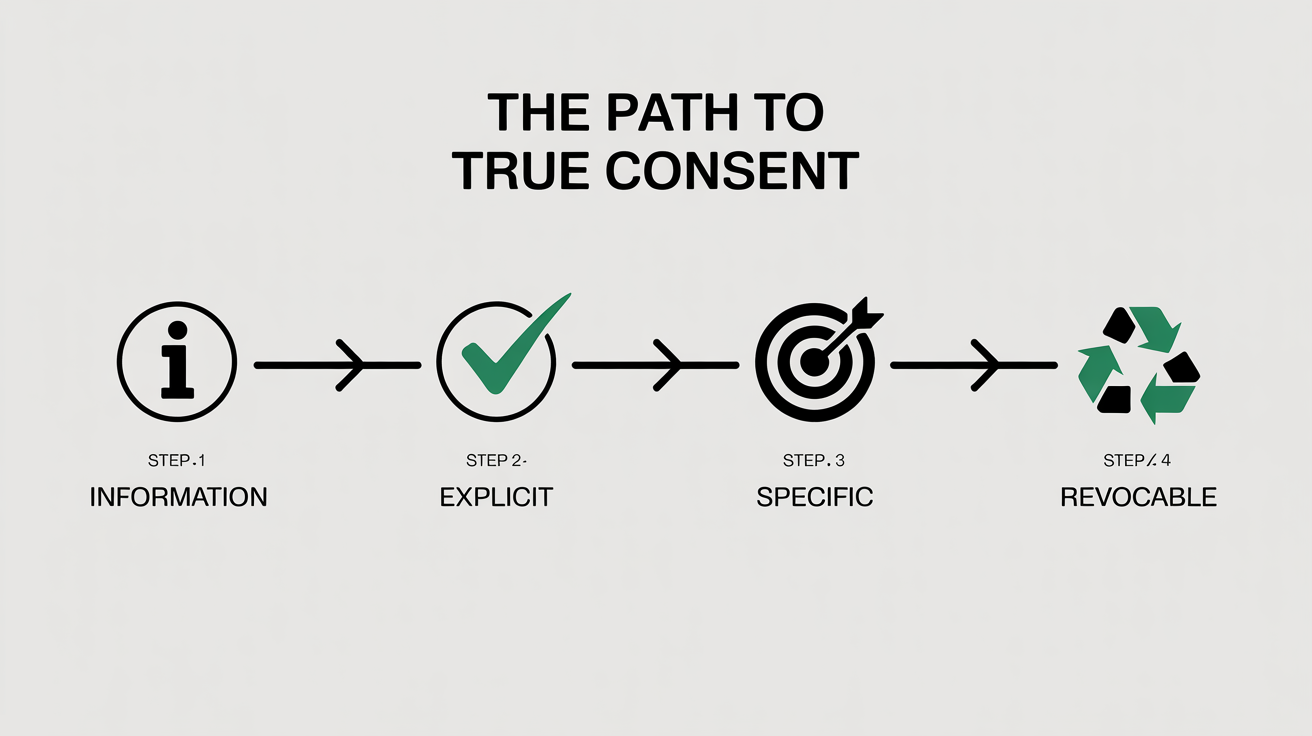

A vague clause buried in a 2,000-word Terms of Service does not constitute consent. True consent must be:

- Informed: The person must know exactly what they are agreeing to. How will the clone be used? For what purpose? In what contexts?

- Explicit: It must be a clear, opt-in action, not a pre-checked box or an assumption.

- Specific: Consent to clone a voice for an internal training video is not consent to use it in a national advertising campaign. The scope must be clearly defined.

- Revocable: The person must have a clear and simple way to withdraw their consent, with a corresponding guarantee that their voice clone and source data will be permanently deleted.
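To make the four requirements concrete, here is a minimal Python sketch of a consent registry, assuming a simple in-memory design. The names `ConsentRegistry`, `grant`, `permits`, `store_artifact`, and `revoke` are hypothetical and do not reflect any particular platform's API. The sketch refuses consent that was not an explicit opt-in, ties every grant to a specific set of purposes, and drops stored voice data when consent is revoked.

```python
from dataclasses import dataclass


@dataclass
class ConsentRecord:
    """One donor's consent (hypothetical schema, not any platform's API)."""
    donor_id: str
    purposes: frozenset[str]   # Specific: the exact uses the donor agreed to
    disclosed_terms: str       # Informed: the plain-language terms the donor saw
    revoked: bool = False


class ConsentRegistry:
    """In-memory sketch; a production system would need durable, audited storage."""

    def __init__(self) -> None:
        self.records: dict[str, ConsentRecord] = {}
        self.voice_data: dict[str, list[str]] = {}  # donor_id -> source audio / model files

    def grant(self, donor_id: str, purposes: set[str], disclosed_terms: str,
              opted_in: bool) -> ConsentRecord:
        # Explicit: only an affirmative action by the donor should set this flag;
        # a pre-checked default must never be passed in as True.
        if not opted_in:
            raise ValueError("consent requires an explicit opt-in action")
        # Informed + Specific: both the terms and the scope must be stated.
        if not disclosed_terms.strip() or not purposes:
            raise ValueError("disclosed terms and at least one purpose are required")
        record = ConsentRecord(donor_id, frozenset(purposes), disclosed_terms)
        self.records[donor_id] = record
        return record

    def permits(self, donor_id: str, purpose: str) -> bool:
        # A use is allowed only if consent exists, is not revoked, and covers it.
        record = self.records.get(donor_id)
        return (record is not None and not record.revoked
                and purpose in record.purposes)

    def store_artifact(self, donor_id: str, path: str) -> None:
        # Source audio and model files may only be kept while consent is active.
        record = self.records.get(donor_id)
        if record is None or record.revoked:
            raise PermissionError("no active consent for this donor")
        self.voice_data.setdefault(donor_id, []).append(path)

    def revoke(self, donor_id: str) -> list[str]:
        # Revocable: withdrawing consent removes the stored source audio and clone.
        # This sketch only drops the in-memory entries and returns their paths;
        # real deletion would also have to cover files, backups, and caches.
        if donor_id in self.records:
            self.records[donor_id].revoked = True
        return self.voice_data.pop(donor_id, [])
```

Keeping the revoked record (rather than deleting it) is a design choice here: it preserves evidence that consent was withdrawn, while the voice data itself is removed.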

A flowchart outlining the four steps to true consent in voice cloning: Informed, Explicit, Specific, and Revocable.

The Gold Standard in Practice: When Lucasfilm and Respeecher decided to recreate the voice of a young Luke Skywalker, they worked directly with Mark Hamill. When they preserved the iconic voice of James Earl Jones, it was with his explicit permission and participation. This is the professional, ethical model: collaboration, not appropriation.

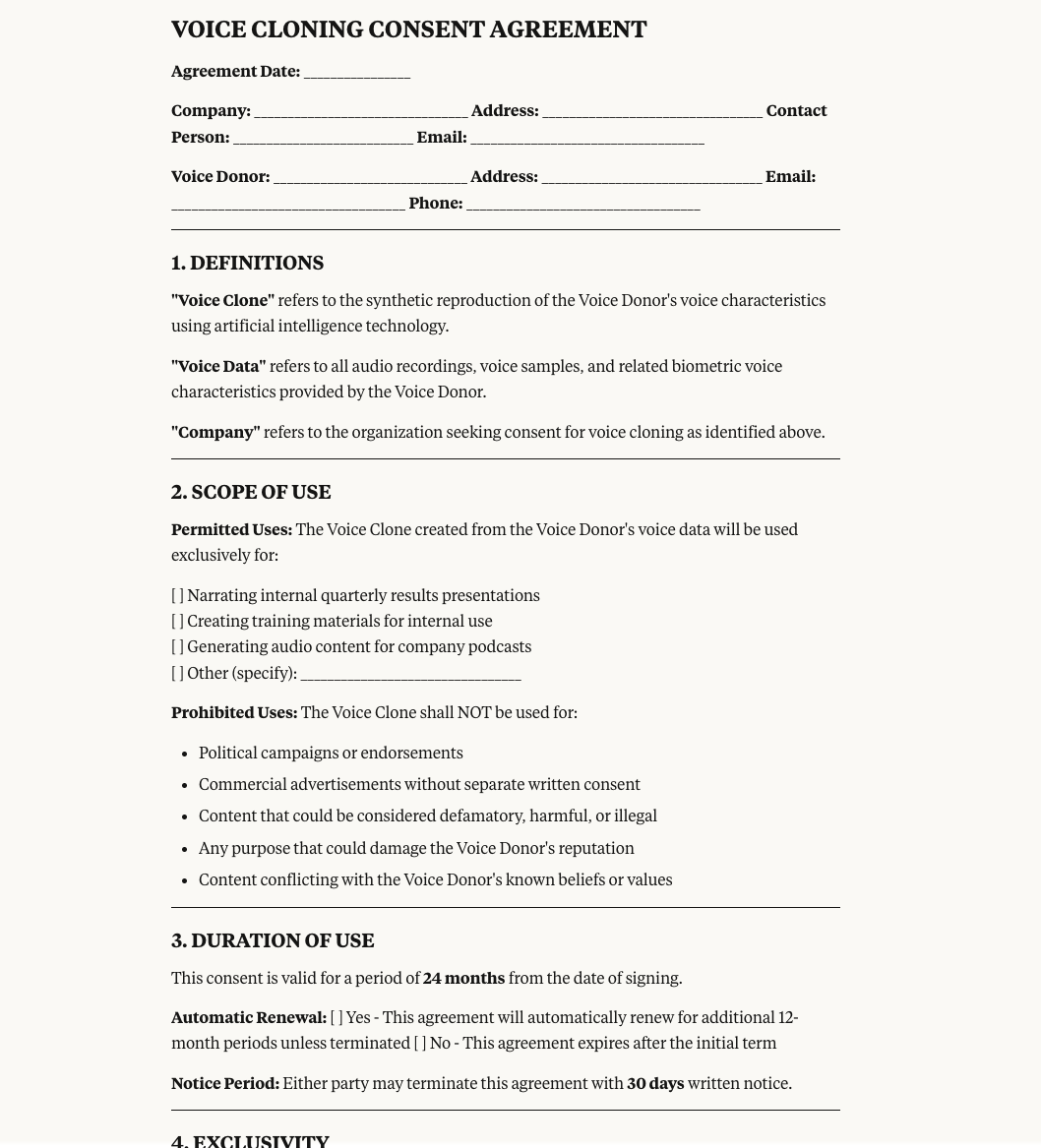

THE FINE PRINT: What a Real Consent Form Looks Like

A proper voice cloning consent agreement, drafted by legal counsel, should contain clear sections on:

- Scope of Use: ("This voice clone will be used exclusively for narrating internal quarterly results presentations.")

- Duration of Use: ("This consent is valid for a period of 24 months from the date of signing.")

- Exclusivity: (Will this clone be used only by your company, or can it be licensed to third parties?)

- Revocation Process: (How does the voice donor request deletion, and what is the company's guaranteed timeline for doing so?)

- Compensation: (Is there a one-time fee, a royalty, or is it part of a salaried role?)
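The same clauses can also be mirrored in a machine-readable record, so that software can check a proposed use against the signed terms before generating audio. The sketch below is hypothetical: the field names, the purpose labels, and the rule that a use is valid only inside the duration window are illustrative assumptions, and the signed legal document, not this code, remains authoritative.

```python
from dataclasses import dataclass
from datetime import date, timedelta


def add_months(start: date, months: int) -> date:
    """Same day-of-month `months` later, clamped to the end of shorter months."""
    years, month_index = divmod(start.month - 1 + months, 12)
    year, month = start.year + years, month_index + 1
    first_of_next = date(year + month // 12, month % 12 + 1, 1)
    last_day = (first_of_next - timedelta(days=1)).day
    return date(year, month, min(start.day, last_day))


@dataclass(frozen=True)
class ConsentAgreement:
    """Hypothetical machine-readable mirror of a signed agreement's clauses."""
    scope: frozenset[str]         # Scope of Use
    signed_on: date
    duration_months: int          # Duration of Use, e.g. 24
    licensee: str                 # Exclusivity: the company granted the clone
    third_party_licensing: bool   # Exclusivity: may it be licensed onward?
    deletion_deadline_days: int   # Revocation Process: guaranteed deletion timeline
    compensation: str             # Compensation: "one-time fee", "royalty", ...

    def expires_on(self) -> date:
        return add_months(self.signed_on, self.duration_months)

    def permits(self, purpose: str, user: str, on: date) -> bool:
        in_scope = purpose in self.scope
        in_term = self.signed_on <= on < self.expires_on()
        # Simplification: when onward licensing is allowed, any user passes;
        # a real system would check for an actual sublicense.
        allowed_user = user == self.licensee or self.third_party_licensing
        return in_scope and in_term and allowed_user

    def deletion_due(self, revoked_on: date) -> date:
        # Latest date by which the clone and source data must be deleted.
        return revoked_on + timedelta(days=self.deletion_deadline_days)
```

For example, an agreement signed on 1 March 2024 with a 24-month term stops permitting use on 1 March 2026, and a use outside the listed scope or by an unlisted company is rejected even within the term.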

VOICE CLONING CONSENT AGREEMENT


Pillar 2: The Question of Ownership - Who Holds the Digital Keys?

This is the most complex legal area in voice cloning, and where many platforms reveal their true intentions. Who owns the voice? The answer is nuanced.

- The Source Audio: The initial recording of your voice (the "voice sample") is your biometric data. You own it, just like you own your fingerprint.

- The AI Model: The model file produced when the AI is trained on your voice sample. This is the "clone" itself.

- The Generated Audio: The final MP3 or WAV files created by using the AI model to speak a script.

The ethical crisis arises when a platform's Terms of Service forces you to grant them ownership or an unrestricted license to the AI model derived from your voice.

The Red Flag: In early 2025, a firestorm erupted when users scrutinized the updated Terms of Service for the voice platform ElevenLabs. The terms included language granting the company a "perpetual, irrevocable, royalty-free, worldwide license" to use voice recordings and the models derived from them. This meant that even if a user deleted their account, the company could potentially continue using the technology created from their voice indefinitely.

This led to a swift backlash. Kukarella, for instance, publicly ended its partnership with ElevenLabs, citing these unacceptable terms and immediately transitioning to a new, privacy-first provider. This was a clear corporate action demonstrating that for responsible companies, user ownership is a non-negotiable principle. An ethical platform's stance must be that the user retains full ownership and control over their biometric voice data and the models created from it.

Platform Policies: Who Owns Your Voice?

| Platform | User Retains Full Ownership? | Perpetual License Claimed? | Clear Data Deletion Policy? |
|---|---|---|---|
| Kukarella | ✅ | ❌ | ✅ |
| ElevenLabs | ❌ | ✅ | ⚠️ |
| Resemble AI | ✅ | ❌ | ✅ |

FROM THE TRENCHES

"The likeness, the voice of a performer, is their identity. It’s their marketability. It’s not a piece of code that can just be licensed away in perpetuity. We are fighting for contractual language that ensures consent and fair compensation every time that voice is used, just as if the actor were in the booth."

Duncan Crabtree-Ireland, National Executive Director of SAG-AFTRA (the actors' union), during the 2023 strike negotiations.

Pillar 3: Monetization and Fair Compensation

As voice cloning becomes a viable alternative to hiring a voice actor for every session, new models of compensation are essential.

- For Voice Actors: The job is not just about speaking lines anymore. A modern voice actor's business model may include:

  - Licensing Fees: Charging a client a fee to create a voice clone for a specific project.

  - Usage Royalties: Receiving a small payment for every 1,000 words generated by their clone.

  - Buyout Clauses: Offering a client the option to purchase perpetual rights to the clone for a significantly higher, one-time fee.

- For Commercial Use: If you are using a cloned voice for any commercial purpose (marketing, sales, paid products), you must have the legal right to do so. This means either using a fully licensed stock AI voice or having a clear commercial agreement with the person whose voice you cloned. Using a clone created under a "non-commercial" or "personal use" license for a business project is a breach of contract and can lead to legal action.
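The royalty arithmetic is simple enough to sketch. Assuming a hypothetical licensing fee, a per-1,000-word royalty billed pro rata, and a one-time buyout price, the functions below compute what a client owes and the word count at which paying royalties costs as much as the buyout. The function names and all figures are invented for illustration; real rates are set by negotiation.

```python
import math
from decimal import Decimal, ROUND_HALF_UP


def royalty_due(words: int, rate_per_1000: Decimal) -> Decimal:
    """Pro-rata royalty for `words` generated, rounded to the cent."""
    amount = Decimal(words) / Decimal(1000) * rate_per_1000
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def licensing_cost(words: int, license_fee: Decimal, rate_per_1000: Decimal) -> Decimal:
    """Total paid under the licensing-fee-plus-royalties model."""
    return license_fee + royalty_due(words, rate_per_1000)


def breakeven_words(license_fee: Decimal, rate_per_1000: Decimal,
                    buyout_fee: Decimal) -> int:
    """Smallest word count at which fee + royalties reaches the buyout price."""
    if rate_per_1000 <= 0:
        raise ValueError("royalty rate must be positive")
    remaining = buyout_fee - license_fee
    if remaining <= 0:
        return 0  # the licensing fee alone already matches the buyout
    # words * rate / 1000 >= remaining  =>  words >= remaining * 1000 / rate
    return math.ceil(remaining * 1000 / rate_per_1000)


if __name__ == "__main__":
    fee, rate, buyout = Decimal("500"), Decimal("4.00"), Decimal("5000")
    print(royalty_due(2500, rate))
    print(breakeven_words(fee, rate, buyout))
```

With a $500 licensing fee, a $4.00 royalty per 1,000 words, and a $5,000 buyout, the break-even point is 1,125,000 words: a client expecting to generate more than that would pay less with the buyout, while the actor would earn more from royalties. `Decimal` is used instead of floats so that cent amounts round predictably.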

Pillar 4: The Battle Against Misuse - The Deepfake Dilemma

This is the "Frankenstein's monster" Zelda Williams spoke of. Malicious deepfakes and audio fraud are the dark side of this technology. The responsibility to combat this falls on two groups: platforms and users.

Platform Responsibility:

- Clear Policies: An ethical platform must have a zero-tolerance policy against creating clones for harassment, fraud, or unauthorized impersonation.

- Voice Authentication: Many platforms are implementing technology that requires users to read a randomly generated sentence aloud to prove that the voice being cloned belongs to a live, consenting speaker. This makes it much harder for someone to clone a voice from, say, a YouTube video.

- Audio Watermarking: Emerging technology allows for an inaudible "watermark" to be embedded in AI-generated audio, making it easier to trace its origin and verify its authenticity.
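Commercial watermarking schemes vary and are largely proprietary, so the following is only a toy illustration of one classic approach, spread-spectrum watermarking, in pure Python. A secret key seeds a pseudo-random +1/-1 pattern that is added to the audio at low amplitude; anyone holding the key can later correlate the audio with that pattern to check for the mark. The function names, the key values, the `strength` of 0.01, and the halfway detection threshold are all assumptions. Unlike real systems, this sketch does no psychoacoustic shaping, so the mark is not guaranteed to be inaudible, and it would not survive compression, resampling, or cropping.

```python
import math
import random


def chip_sequence(key: int, length: int) -> list[float]:
    """Pseudo-random +1/-1 pattern that only holders of `key` can regenerate."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(length)]


def embed(samples: list[float], key: int, strength: float = 0.01) -> list[float]:
    """Add the keyed pattern at low amplitude on top of the audio samples."""
    chips = chip_sequence(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]


def detect(samples: list[float], key: int, strength: float = 0.01) -> bool:
    """Correlate the audio with the keyed pattern.

    For marked audio the score averages about `strength`; for unmarked audio
    (or the wrong key) it averages about 0, so the threshold sits halfway.
    """
    if not samples:
        return False
    chips = chip_sequence(key, len(samples))
    score = sum(s * c for s, c in zip(samples, chips)) / len(samples)
    return score > strength / 2


if __name__ == "__main__":
    rate = 48_000  # one second of a 440 Hz tone stands in for real speech
    tone = [0.3 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
    marked = embed(tone, key=1234)
    print(detect(marked, key=1234), detect(tone, key=1234), detect(marked, key=9999))
```

The detector works because the host audio is essentially uncorrelated with the random pattern, so its contribution to the score shrinks as more samples are averaged, while the embedded pattern contributes a steady `strength`. Longer clips therefore give more reliable detection.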

User Responsibility:

The defense "I just used it for a meme" is unlikely to hold up in court if it violates a person's "right of publicity" (their right to control the commercial use of their identity). Cloning a celebrity's voice to have them "endorse" a product is a clear violation.

PLOT TWIST:

A Secure Clone is Your Best Defense Against a Deepfake

This is a profoundly important, counter-intuitive concept. How can you prove that a recording of you saying something damaging is a fake?

The best defense is to have an indisputable log of all legitimate uses of your voice. If your voice clone is secured on a platform like Kukarella, which provides project history and an audit trail, you have a verifiable record. You can demonstrate that the fraudulent audio does not exist within your sanctioned ecosystem. In a world of digital impersonation, a secure, well-managed voice clone becomes more than a content tool; it becomes your alibi.
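One way to make such a record tamper-evident is a hash chain, in which each log entry includes the hash of the entry before it. The sketch below uses a hypothetical `VoiceAuditLog` class built on Python's standard `hashlib` and `json` modules; it does not describe how Kukarella or any other platform actually stores project history. Two limits are worth stating: matching on a SHA-256 digest recognizes only byte-identical files, so a re-encoded copy of a legitimate render would not match, and a clip's absence from the log shows only that it was not produced through the logged channel.

```python
import hashlib
import json


def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class VoiceAuditLog:
    """Append-only, hash-chained log of sanctioned renders (hypothetical design)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, audio: bytes, project: str, script_id: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {
            "audio_sha256": hashlib.sha256(audio).hexdigest(),
            "project": project,
            "script_id": script_id,
            "prev_hash": prev_hash,
        }
        # Each entry's hash covers the previous entry's hash, so editing or
        # deleting an earlier entry breaks every later link in the chain.
        body["entry_hash"] = _entry_hash(body)
        self.entries.append(body)
        return body

    def verify_chain(self) -> bool:
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash or entry["entry_hash"] != _entry_hash(body):
                return False
            prev_hash = entry["entry_hash"]
        return True

    def was_sanctioned(self, audio: bytes) -> bool:
        # True only for byte-identical copies of a logged render.
        digest = hashlib.sha256(audio).hexdigest()
        return any(e["audio_sha256"] == digest for e in self.entries)
```

Because the log is held by the platform, its evidentiary value also depends on trusting that platform; publishing or timestamping the latest chain hash with an independent party would strengthen it.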

Frequently Asked Questions (FAQ)

Q: Can I clone a celebrity's voice for personal use, like a private joke?

A: Legally, this is a gray area, but ethically, it's a bad practice. It normalizes the act of using someone's identity without their consent. Furthermore, many reputable platforms prohibit cloning a public figure's voice, or a voice taken from copyrighted material, without the rights to do so, and may ban accounts that try.

Q: I'm a voice actor. Is AI going to take my job?

A: It is transforming your job. It will likely commoditize low-end work (e.g., simple IVR prompts). However, it creates new opportunities for high-end work: licensing your "perfect" voice as a premium AI model, earning royalties on its usage, and using your clone for tedious "punch-in" corrections, saving you hours of studio time.

Q: How can I protect my own voice from being cloned without my permission?

A: Be vigilant about the terms of service for any app or platform you use. Be wary of "fun" apps that ask you to record your voice. Most importantly, advocate for and use platforms that have clear, human-readable policies that guarantee you retain 100% ownership and control of your biometric data.

Q: What happens to my voice clone if the platform I use shuts down?

A: This is a critical question to ask before you sign up. An ethical platform should have a policy that guarantees the permanent deletion of all user data and voice models from their servers in the event of insolvency.

Your Responsibility Checklist: A Framework for Ethical Use

The power to replicate a human voice is immense. It carries with it a profound responsibility. By prioritizing ethics, championing clear consent, and demanding user ownership, we can ensure this technology fulfills its incredible potential as a tool for creativity and connection, not as the monster so many fear.