In early 2023, the world's biggest YouTube star, MrBeast (Jimmy Donaldson), conducted a massive experiment. He took his already viral videos and had them professionally dubbed into 11 different languages by human voice actors. The result? An explosion in viewership. His Spanish channel alone rocketed to over 22 million subscribers in under a year. The strategy was a resounding success, proving a huge, untapped global appetite for creator content. But it also came with an enormous price tag.

"I'm hiring voice actors in all these different languages to dub over my videos," MrBeast explained in a video analyzing his strategy. "We're spending a crazy amount of money."

This is the global creator's dilemma. The opportunity is infinite, but the traditional methods of seizing it—hiring, managing, and directing dozens of voice actors across multiple countries—are a logistical and financial nightmare. This expensive, time-consuming process has, until now, been the only way to break the language barrier without sounding like a soulless, robotic GPS.

But what if you could achieve the global reach of MrBeast without his nine-figure budget? What if you could clone your voice once and have it speak dozens of languages fluently, not in a generic robot voice, but with your own unique cadence and personality? This isn't a future-state prediction. It's a capability available today, and it's positioned to become one of the most important tools for anyone with global ambitions.

This report will dissect the strategies, ethics, and groundbreaking potential of multilingual voice cloning. We will move beyond the hype to provide a clear-eyed look at how this technology works, who is using it, and how it can be leveraged to execute a world-class global content strategy from a single laptop.

The Strategic Imperative: Why Speaking "Globish" Is No Longer Enough

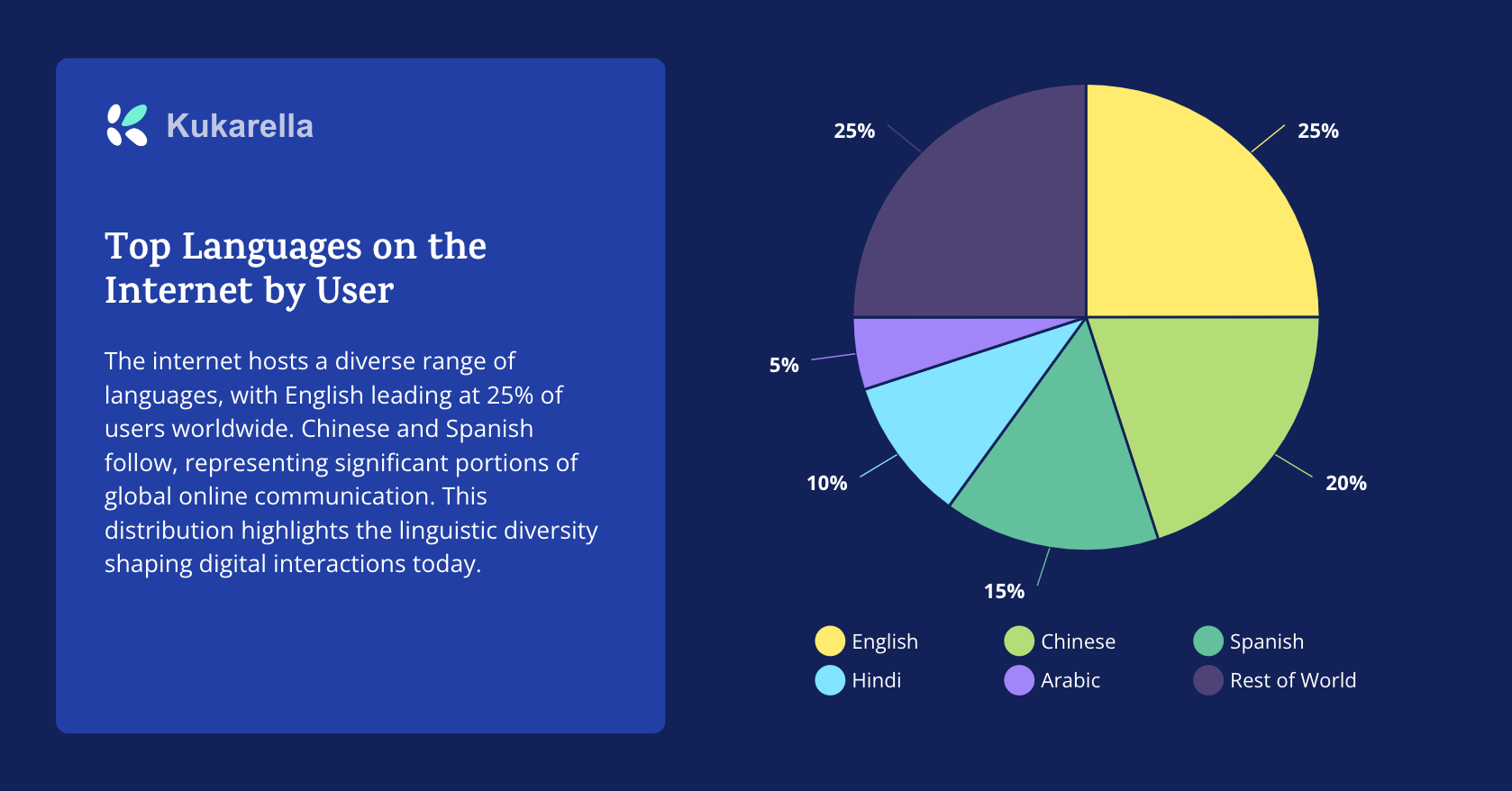

The Global Internet by Language

For decades, creating content primarily in English (or "Globish") was a viable strategy. But the digital landscape has fractured into countless linguistic communities. The data is clear: a 2020 CSA Research study of 8,709 global consumers found that

- 76% prefer to buy products with information in their own language, and

- 40% will never buy from websites in other languages.

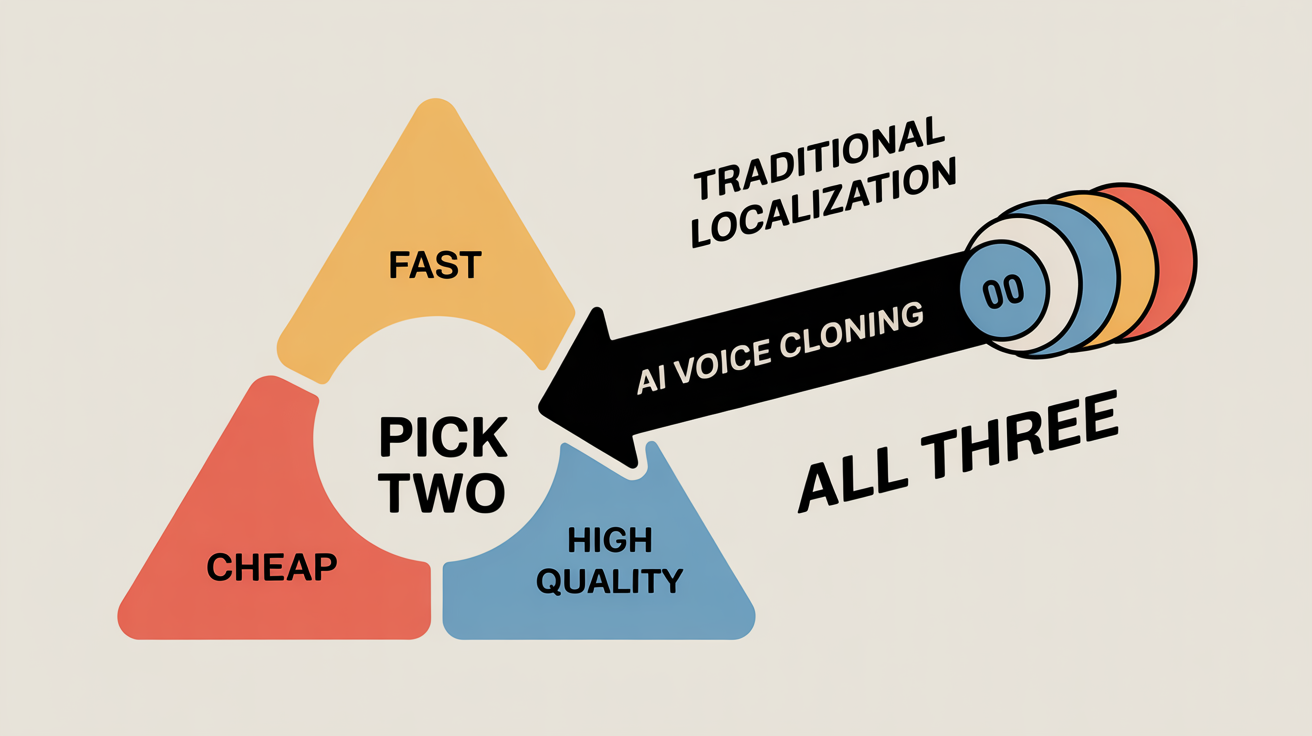

The old methods of reaching these audiences are fundamentally broken for the modern content economy:

Hiring Voice Actors: Expensive, slow, and creates brand inconsistency. Your brand has a different voice in every country.

Subtitles: Ineffective for passive consumption like podcasts, video played in the background, or for audiences with low literacy rates. They are an accessibility tool, not a true localization strategy.

Standard Text-to-Speech (TTS): Fast and cheap, but the robotic, inauthentic output actively damages brand perception and erodes audience trust.

Multilingual voice cloning shatters this trilemma. It offers the scalability of TTS, the authenticity of a human voice, and a level of brand consistency that was previously impossible.

FROM THE TRENCHES

"80% of the world doesn't speak English. That content is effectively decaying, it's not being watched. But if you're able to dub it, you're able to give it a new lease of life... We see that for every one language that you dub into, you see a 100% increase in watch time."

— Jesse Shemen, CEO of Papercup (an AI dubbing company), speaking to the Hollywood Reporter.

The strategic imperative is clear, and a new ecosystem of tools is emerging to meet the demand. While many companies offer AI voices, the specific ability to create a multilingual clone of a single voice is a more specialized field.

The Tool Ecosystem: Choosing Your Global Voice Partner

| Tool | Primary Focus | Multilingual Clone? | Key Differentiator | Best For |
| --- | --- | --- | --- | --- |
| Kukarella | Integrated Content Suite | Yes (~35 Languages) | All-in-One Workflow. Combines cloning, script generation, translation, and emotional styling in a single platform. | Creators & businesses who need a complete "idea-to-global-content" solution. |
| ElevenLabs | High-Fidelity Voice Cloning | Yes (~29 Languages) | Raw Vocal Realism. Market leader in creating the most realistic-sounding voice replicas. | Audio purists and developers who prioritize vocal quality above all else and will build their own workflow. |
| Respeecher | Hollywood-Level Voice Conversion | Yes (Managed Service) | Voice-to-Voice Conversion. Can make one person's voice sound exactly like another's. | Major film studios with large budgets for projects like recreating a young actor's voice. |
| HeyGen / Synthesia | AI Video Avatars | Yes (for Avatars) | Video-First. The multilingual audio is tied to a digital avatar, not a portable audio file. | Corporate clients who need to create scalable, avatar-led training or marketing videos. |

Kukarella: Positions itself as an all-in-one content suite. Its key differentiator is the tight integration of a multilingual voice clone with an AI script assistant, dialogue tools, and visual generators. This makes it a strong choice for creators and businesses looking for a complete "idea-to-global-content" workflow within one platform. It supports dozens of languages from a single clone.

ElevenLabs: A major player known for its high-fidelity voice cloning technology. It also offers multilingual capabilities and is a powerful option for users who prioritize raw vocal quality above all else and are comfortable integrating that audio into a separate production workflow. Their platform is robust and a favorite among AI enthusiasts and developers.

Respeecher: This high-end platform specializes in "voice-to-voice" speech conversion, famously used to recreate the voice of a young Luke Skywalker in The Mandalorian and Darth Vader for Disney. While incredibly powerful for Hollywood-level projects, it is typically a managed service aimed at major studios, with a price point and complexity that place it outside the reach of most individual creators or businesses.

HeyGen / Synthesia: These platforms are leaders in AI video generation, focusing on creating AI avatars that speak scripts. While they offer multilingual audio, their core product is video, not a standalone, portable voice clone that can be used for podcasts or other audio-only media. They solve a different, though related, problem.

Understanding this landscape is key. The choice of tool depends entirely on the user's primary goal: Is it creating a standalone, versatile audio asset (Kukarella, ElevenLabs), producing a Hollywood film (Respeecher), or generating an avatar-led video (HeyGen)?

The New Playbook: Five High-Impact Strategies Using Multilingual Clones

This is how leading-edge creators and businesses are leveraging this technology right now.

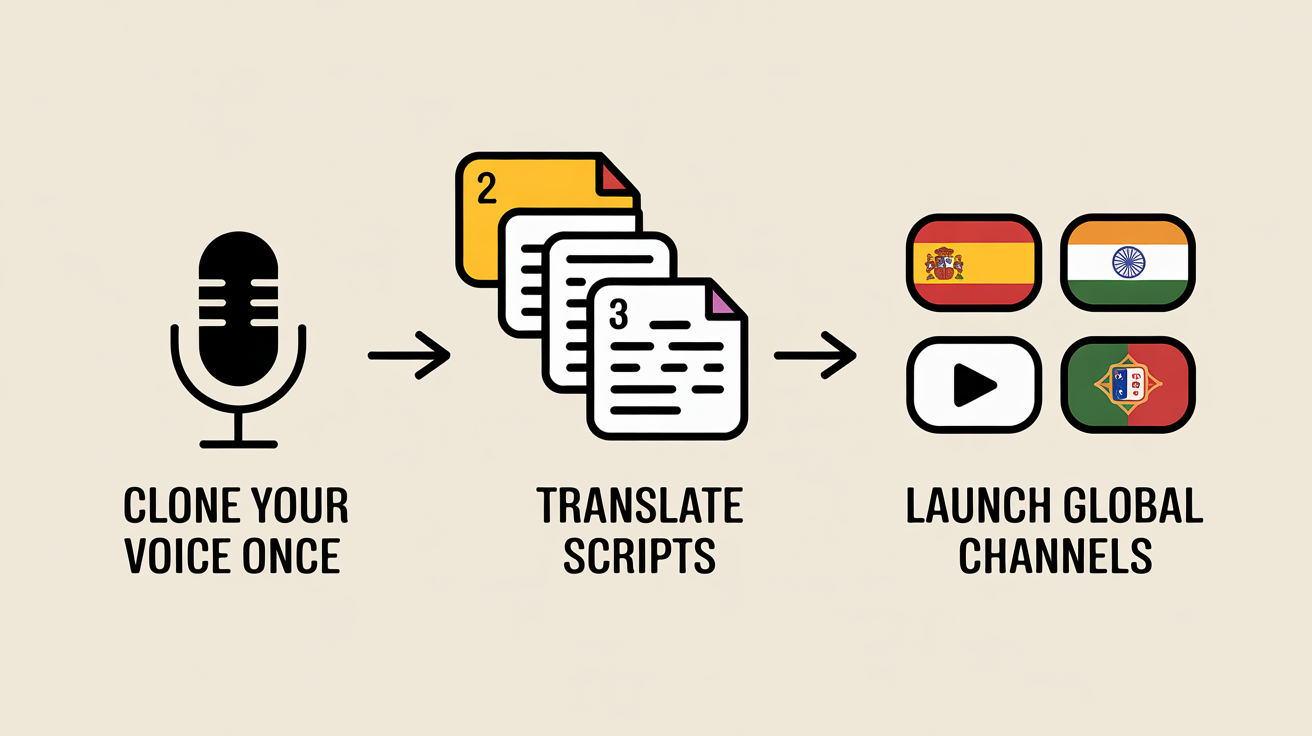

1. The "MrBeast Model on a Budget" for Creators

The Strategy: Achieve massive audience expansion by re-releasing your best content in multiple languages, using your own cloned voice to maintain authenticity.

Action: A creator clones their voice once on a platform like Kukarella. They identify their top 10 performing videos. They use an AI script translation service (or a human translator for higher nuance) to convert the scripts into Spanish, Chinese, Hindi, and Portuguese. They then generate new audio tracks for these videos using their own multilingual clone.

Result: Within a day, they can launch four new international YouTube channels. The new audience isn't hearing a stranger narrate; they are hearing the creator's own voice speaking their language. This preserves the crucial host-audience connection, the "parasocial relationship" that is the lifeblood of a creator's brand.

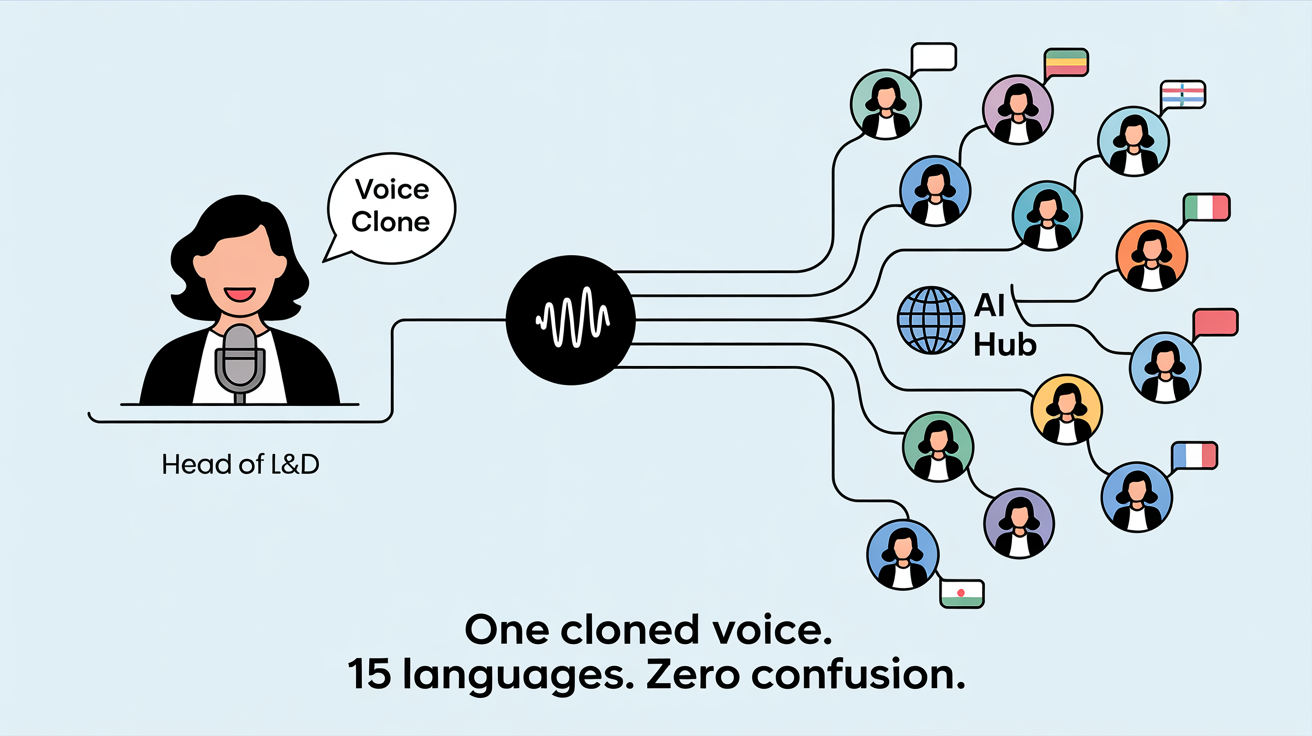

2. The Global L&D (Learning & Development) Rollout

The Strategy: A multinational corporation needs to deploy a mandatory cybersecurity training module to 5,000 employees across 15 countries, each requiring its own language.

The Old Way: A year-long project involving 15 different translation and voiceover teams, resulting in inconsistent messaging and massive costs.

The New Way:

The Head of L&D records the training narration in English. Her voice is cloned.

The script is translated into the 14 other required languages.

Her single voice clone generates the narration for all 15 versions of the course.

Result: The entire training module is localized in under a week. Every employee, from Tokyo to Berlin to São Paulo, hears the same authoritative, consistent instructions, supporting a shared understanding of critical security protocols.
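The rollout above reduces to a short loop: one cloned voice, one source script, and one pass per target language, with a review gate before any audio is generated. The sketch below is a minimal illustration under stated assumptions: `translate`, `cultural_review`, and `synthesize` are hypothetical placeholders, not any platform's real API, and would be swapped for your translation service, your native-speaker reviewer, and your voice platform's synthesis call.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LocalizedVersion:
    """One version of the course: the same cloned voice, one language."""
    language: str
    script: str
    reviewed: bool = False
    audio_path: Optional[str] = None


def translate(script: str, language: str) -> str:
    # Hypothetical placeholder: call your MT service or human translator here.
    return f"[{language}] {script}"


def cultural_review(script: str) -> bool:
    # Hypothetical placeholder: a native speaker approves (True) or rejects.
    return True


def synthesize(voice_id: str, script: str, language: str) -> str:
    # Hypothetical placeholder for a voice platform's TTS call with a clone.
    # Returns the path where the generated audio would be saved.
    return f"audio/{voice_id}_{language}.mp3"


def localize(voice_id: str, source_script: str, source_language: str,
             target_languages: List[str]) -> List[LocalizedVersion]:
    # The original script needs no translation or cultural review.
    versions = [LocalizedVersion(source_language, source_script, reviewed=True)]
    for language in target_languages:
        versions.append(LocalizedVersion(language, translate(source_script, language)))

    for version in versions:
        if not version.reviewed:
            version.reviewed = cultural_review(version.script)
        if version.reviewed:
            # The same voice_id is reused for every language.
            version.audio_path = synthesize(voice_id, version.script, version.language)
    return versions


if __name__ == "__main__":
    for v in localize("head-of-ld", "Never share your password.", "en", ["ja", "de", "pt-BR"]):
        print(v.language, v.audio_path)
```

Versions that fail review keep `audio_path` as `None`, so nothing is synthesized until a reviewer signs off.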

3. The Indie Filmmaker's Global Distribution

The Strategy: An independent filmmaker wants to submit their documentary to film festivals in South Korea, France, and Germany, but cannot afford professional dubbing.

Documented Use Case: While not for localization, the 2020 HBO documentary Welcome to Chechnya used AI-powered voice and face replacement to protect the identities of LGBTQ+ refugees. Director David France told IndieWire, "We were able to allow them to tell their own stories, in their own words, with their own voices, but without revealing who they are." This demonstrates the power of AI to manipulate voice while preserving the original emotional intent.

The Tactic: The filmmaker uses a multilingual clone to dub their film's narration. While it may not capture the nuance of a dramatic performance, it is often sufficient for documentary narration, making the film accessible to festival selection committees who may not have time to read subtitles.

4. Real-Time News and Information Dissemination

The Strategy: A news organization needs to report on a breaking global health crisis and make the information immediately accessible worldwide.

Action: A health correspondent records a 2-minute update. Their cloned voice then generates the report in dozens of languages within minutes.

Result: A vital public health announcement is distributed globally across audio platforms and video channels within minutes, not days, potentially saving lives by breaking down language barriers in a crisis.

5. The Emotional Resonance Layer for Global Ad Campaigns

The Strategy: A brand wants to launch a global ad campaign for a new product. A single narration delivered in one tone risks falling flat across different cultures. They need to tailor the emotional tone for each market.

The Old Way: Hiring separate directors and voice actors in each region to capture the right "feel"—an incredibly subjective and expensive process.

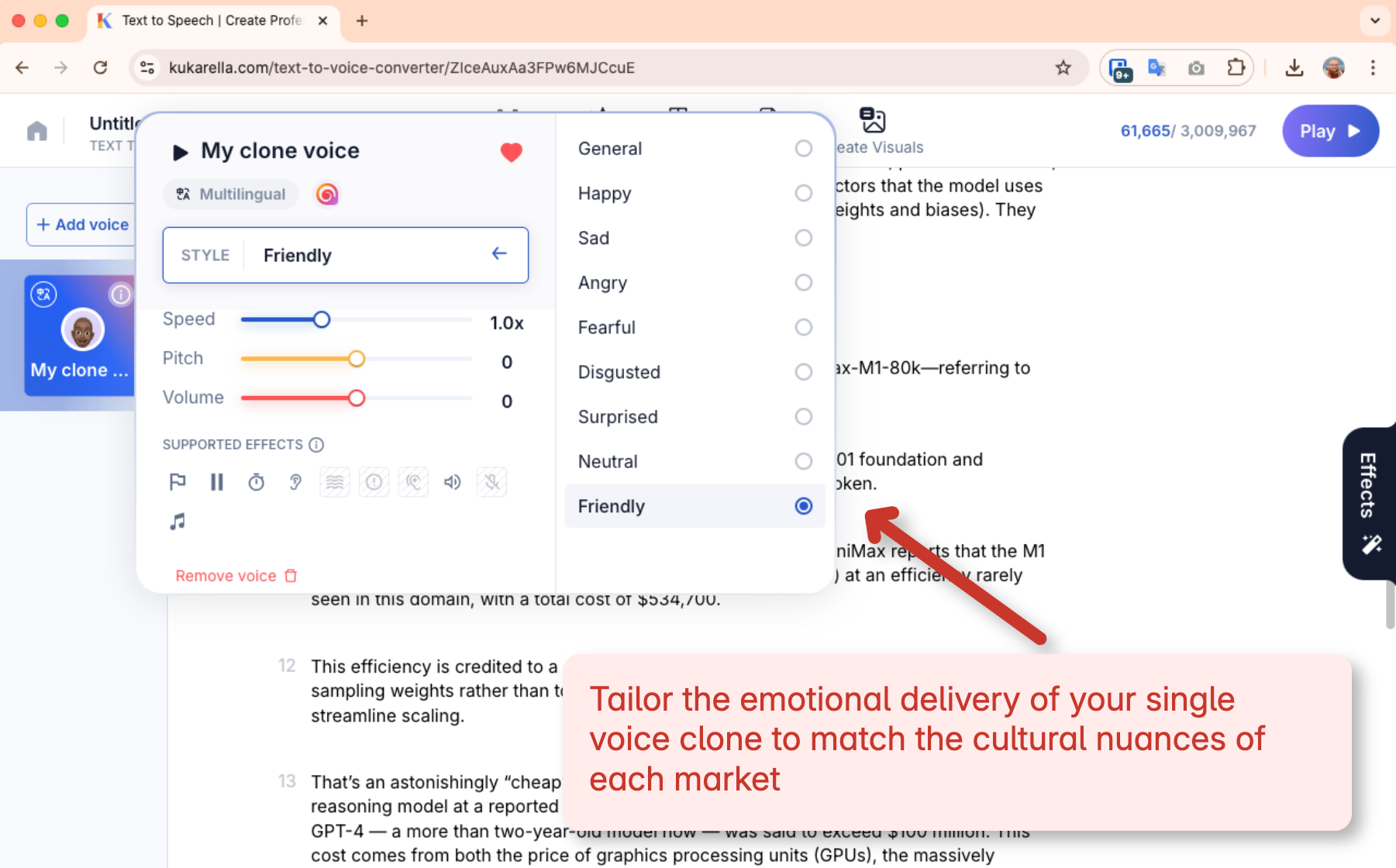

The Advanced Tactic: Use a multilingual clone that supports emotional styling. With Kukarella, a user can clone their brand's signature voice once. Then, for each language, they can apply different emotional styles.

For the UK Market: Generate the English audio with a "Friendly" or "Excited" style.

For the German Market: Perhaps a more formal "Newscast" or "Professional" style is more appropriate.

For a Youth-Oriented Campaign in Brazil: An "Upbeat" or energetic style in Portuguese.
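The per-market styling above is essentially a small configuration table: the cloned voice stays fixed while language and style vary by market. In this minimal sketch the style names come from the examples above, but `SUPPORTED_STYLES`, `MarketVariant`, `build_requests`, and the request field names are hypothetical, not Kukarella's actual API; check your platform's documentation for the styles and parameters it really exposes.

```python
from dataclasses import dataclass
from typing import Dict, List

# Style names mirror the examples above. Which styles a given platform
# offers is an assumption here; check the vendor's documentation.
SUPPORTED_STYLES = {"Friendly", "Excited", "Newscast", "Professional", "Upbeat"}


@dataclass(frozen=True)
class MarketVariant:
    market: str
    language: str  # BCP 47 language tag, e.g. "pt-BR"
    style: str


CAMPAIGN = [
    MarketVariant("UK", "en-GB", "Friendly"),
    MarketVariant("Germany", "de-DE", "Professional"),
    MarketVariant("Brazil (youth)", "pt-BR", "Upbeat"),
]


def build_requests(voice_id: str, scripts: Dict[str, str],
                   variants: List[MarketVariant]) -> List[dict]:
    """Pair one cloned voice with a per-market language and emotional style.

    The keys of each request dict are hypothetical field names, not a real API.
    """
    requests = []
    for variant in variants:
        if variant.style not in SUPPORTED_STYLES:
            raise ValueError(f"Unsupported style {variant.style!r} for {variant.market}")
        requests.append({
            "voice_id": voice_id,  # the same clone in every market
            "language": variant.language,
            "style": variant.style,
            "text": scripts[variant.language],  # already translated and reviewed
        })
    return requests


if __name__ == "__main__":
    scripts = {
        "en-GB": "Meet the new X.",
        "de-DE": "Das ist das neue X.",
        "pt-BR": "Conheça o novo X.",
    }
    for request in build_requests("brand-voice", scripts, CAMPAIGN):
        print(request["language"], request["style"])
```

Keeping style choices in data rather than code means adding a market is a one-line change, and validating styles up front catches a typo before any credits are spent.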

Result: This is the pinnacle of authentic globalization. The brand maintains a single, consistent voice worldwide, but has the power to fine-tune the emotional performance to match cultural nuances and campaign goals. It allows a global brand to "speak like a local" in its delivery, not just its language, creating a far more resonant and effective campaign. This level of granular emotional control, combined with multilingual capabilities, is a strategic advantage that traditional methods cannot replicate at scale.

"Plot Twist" Moment: It’s Not About Translation, It’s About Trust

The revolutionary aspect of multilingual voice cloning isn’t just that it can speak Spanish. It's that it can speak Spanish sounding like you.

This is a fundamental shift from localization to what could be called "authentic globalization."

Your voice is a core component of your brand's sonic identity. It carries an implicit signature of trust, authority, and personality that your audience recognizes. When you replace it with a generic voice actor in a new market, you break that signature. The new audience hears the content, but they don't hear you.

A multilingual clone preserves this signature. The cadence, the pacing, the unique timbre of your voice remains, even as the words change. This maintains brand consistency on a global scale and builds a bridge of trust with new audiences far more effectively than a disembodied, unfamiliar voice ever could. The goal isn't just to be understood; it's to be recognized and trusted.

Troubleshooting & Problem-Solving: The Global Nuance Guide

Q: "What happens to idioms, jokes, or cultural references? Will they translate correctly?"

A: Not reliably, and this is the most important limitation to understand. AI is a linguistic translator, not a cultural one. A phrase like "it's raining cats and dogs" may be translated literally, which will sound absurd in most other languages.

Solution: The "Human-in-the-Loop" workflow. After generating the translated script but before generating the audio, have a native-speaking freelancer do a quick "cultural review." For a 10-minute video script, this is often less than an hour of work on a platform like Upwork or Fiverr and is essential for avoiding embarrassing mistakes.
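Part of that review can be made faster by flagging risky phrases in the source script before it goes out for translation, so the reviewer knows which lines to check first. The sketch below assumes a hand-maintained watch-list (`IDIOM_WATCHLIST` is hypothetical and deliberately tiny). It is a plain phrase match, so it only catches idioms already on the list and does not replace the native-speaker review.

```python
import re
from typing import List, Tuple

# Hypothetical, deliberately tiny watch-list; in practice you would grow it
# from the idioms, jokes, and references your own scripts actually use.
IDIOM_WATCHLIST = [
    "raining cats and dogs",
    "break a leg",
    "piece of cake",
    "hit it out of the park",
]


def flag_idioms(script: str, watchlist: List[str] = IDIOM_WATCHLIST) -> List[Tuple[int, str]]:
    """Return (line_number, phrase) pairs for the reviewer to check first."""
    flags = []
    for line_no, line in enumerate(script.splitlines(), start=1):
        lowered = line.lower()
        for phrase in watchlist:
            # Word boundaries avoid matching inside longer words.
            if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
                flags.append((line_no, phrase))
    return flags


if __name__ == "__main__":
    script = "Welcome back!\nIt's raining cats and dogs out there.\nThis setup is a piece of cake."
    for line_no, phrase in flag_idioms(script):
        print(f"line {line_no}: {phrase}")
```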

Q: "Will the AI clone have a perfect native accent in every language?"

A: It's more nuanced than that. The AI applies the phonetic rules of the target language, so pronunciation is generally close to native. However, it retains much of the original speaker's prosody: the rhythm, cadence, and intonation. This is arguably a feature, not a bug. It makes the voice sound like a fluent non-native speaker, which can be perceived as more authentic and less like a "deepfake."

Q: "How do I quality-check a 20-minute audio file in a language I don't speak?"

A: Not by yourself. Your process needs to be front-loaded.

Trust the Script: Your primary quality control step is the cultural review of the text script. If the script is right, most of the risk is gone; a short spot check catches the rest, such as mispronounced names or odd pacing.

Spot Check: Have the native speaker listen to 30-60 seconds of the final audio to ensure the pacing and tone feel right. They don't need to listen to the whole thing.
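To keep a 30-60 second spot check from covering only the opening minute, it can be split into a few short windows spread across the file. This is a minimal sketch, assuming stratified random sampling is an acceptable way to choose what the reviewer hears; `spot_check_windows` and its defaults (45 seconds total, three windows) are illustrative choices, not a standard.

```python
import random
from typing import List, Optional, Tuple


def spot_check_windows(duration_s: float, total_s: float = 45.0, n: int = 3,
                       seed: Optional[int] = None) -> List[Tuple[float, float]]:
    """Return n (start, end) windows, in seconds, for a native-speaker spot check.

    The file is cut into n equal segments and one window is placed at a random
    offset inside each, so the reviewer hears the beginning, middle, and end.
    """
    rng = random.Random(seed)  # pass a seed to get the same windows every run
    segment = duration_s / n
    window = min(total_s / n, segment)  # a window never exceeds its segment
    windows = []
    for i in range(n):
        start = i * segment + rng.uniform(0, segment - window)
        windows.append((start, start + window))
    return windows


if __name__ == "__main__":
    # A 20-minute file: three 15-second windows, one in each third of the audio.
    for start, end in spot_check_windows(20 * 60, seed=7):
        print(f"{start / 60:5.2f} min -> {end / 60:5.2f} min")
```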

"The Fine Print": The Ethical Tightrope of a Universal Voice

The Deepfake Dilemma: The same technology that can dub a training video can be used to create malicious deepfakes. This power comes with immense responsibility. It's critical to use platforms with strong terms of service that forbid impersonation and malicious use.

Consent and Identity: You can only clone a voice you own or have explicit, legal permission to use. Cloning a celebrity's voice for your own content without permission can violate right-of-publicity and other laws, and it is unethical. The "voice skin" belongs to the original person.

The Risk of Cultural Flattening: While powerful, there's a risk that relying solely on one cloned voice for all languages could erase the rich diversity of regional accents and dialects. It's a tool for scaling a single identity, not for capturing the full spectrum of a culture's voice.

Frequently Asked Questions (FAQ)

Q: Is this better than hiring a human voice actor?

A: It's different. A professional human actor can deliver a level of emotional performance for dramatic content that AI cannot yet match. But for most everyday use cases, such as tutorials, corporate narration, news updates, and basic video voiceovers, AI cloning is faster, cheaper, and far more scalable.

Q: What is the real cost?

A: Platforms like Kukarella operate on a subscription or credit-based model. The cost to generate an hour of audio is a tiny fraction of what a single human voice actor would charge for the same work in one language, let alone dozens.

Q: How many languages can one clone really speak?

A: This is platform-dependent, and vendors expand their language lists frequently. Kukarella's technology, for example, supports dozens of languages from a single cloned voice, making it one of the more versatile options on the market.

Q: What if a sentence contains multiple languages, like a mix of English and Spanish?

A: Many advanced multilingual models can handle this automatically. They detect the language shift mid-sentence and pronounce each word in its own language, often without any special formatting from the user.

You no longer have to choose between staying local and spending a fortune to go global. The language barrier has been broken. The only remaining question is: What will you say to the world?